Organizing for Effectiveness

The Air Force is improving its Decision Support M&S governance. The goals are effectiveness and efficiency:

- Understand how well the analytic M&S enterprise is functioning; in particular, ensure that it delivers quality results in support of decision making.

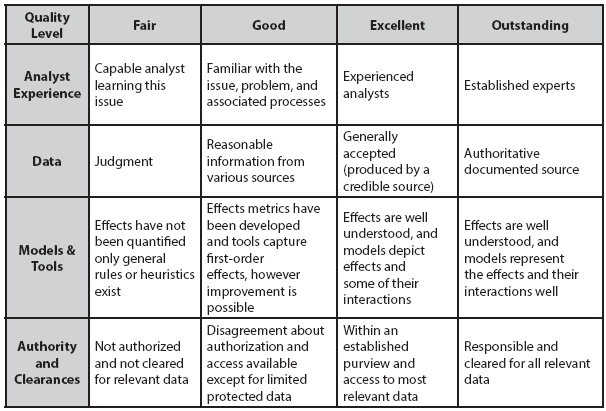

- Enable efficiency by aligning the many analytic organizations conducting multiple levels of M&S into a cohesive and coherent whole, particularly in sharing models, data, expertise, and results.

Several initiatives are laying the foundation for achieving these goals. The Air Force conducted a rapid improvement event on M&S governance in 2010. The resulting decision was to organize Air Force M&S under the Tri-Chairs for virtual simulators (AF/A3), acquisition (SAF/AQ), and decision support (AF/A9). AF/A3 focuses on hardware-intensive simulators, whereas AF/A9 focuses on analyst-intensive models and simulations. The acquisition community cooperates with both of these pillars on applications that affect its work. This article focuses on Air Force initiatives for analytic M&S for decision support and acquisition.

In 2012, when the Office of the Secretary of Defense (OSD) directed the DoD Components to reduce duplicative studies, the Air Force established a Studies Governance Board. This board initiated a study registry program to reduce duplication. The initial focus was on contracted studies, because contracting actions make those efforts easier to identify. I continue to recommend that we also include organic studies in the registry. Currently, the study approval process requires a literature review to establish what has already been accomplished as a starting point for any new study. Additionally, we use the study registry to ensure that completed studies are archived in the Defense Technical Information Center (DTIC). One contentious aspect has been the definition of a study, which is why I earlier proposed a definition to advance this discussion.
The Studies Governance Board is now broadening its scope to other policy areas to improve our study process.

Beginning in 2010, the Air Force implemented a risk assessment framework that evaluates the risk of any activity against its plan, particularly in terms of cost, schedule, and performance. For each metric, success and failure points are identified, and the expected achievement is evaluated against them to determine the risk (Gallagher, MacKenzie, et al. 2015). The resulting risk assessments align with the Joint Staff risk schema. The Air Force Requirements Oversight Council (AFROC) reviews risk assessments in this prescribed format for each proposed requirement.

In 2014, the Air Force extended this risk assessment framework to each of its mission areas under a program named the Comprehensive Core Capability Risk Assessment Framework (C3RAF). The Air Force organizes around 12 core functions, which are further subdivided into 45 core capabilities. The Chief of Staff of the Air Force directed that a set of metrics be developed for each core capability. As this process evolves, a standard set of metrics will enable the Air Force to better integrate information from operations, exercises, experiments, technology demonstrations, analyses, and wargames. With established metrics, analytic results from these different sources should also be easier to combine. As an analogy, performance results should be more like a book or movie series with the same cast of characters in different settings, rather than completely unrelated stories. Standard metrics will also reveal changes, hopefully improvements, over time.

The Air Force has also started assessing its organic analytic capability and capacity, which is distributed throughout the Air Force. Typically, more military and civilian analysts are assigned to larger organizations, such as major command headquarters and the Air Staff.
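
To make the success/failure-point idea in the risk assessment framework concrete, here is a minimal Python sketch. It assumes that expected achievement is interpolated linearly between the success point (risk 0) and the failure point (risk 100) and that the resulting score is banded into four levels. The interpolation, the band edges and labels, the worst-metric rule for a whole activity, and the names `Metric`, `risk_score`, `risk_level`, and `activity_risk` are illustrative assumptions, not the published method of Gallagher, MacKenzie, et al. (2015) or the Joint Staff schema.

```python
from dataclasses import dataclass

# Assumed four-level banding of a 0-100 risk score (illustrative only):
# each tuple is (inclusive upper edge of the band, label).
RISK_BANDS = [(25.0, "Low"), (50.0, "Moderate"), (75.0, "Significant"), (100.0, "High")]


@dataclass
class Metric:
    """One plan metric (cost, schedule, or performance) for an activity."""
    name: str
    success_point: float  # achievement level treated as full success (risk 0)
    failure_point: float  # achievement level treated as failure (risk 100)
    expected: float       # achievement expected under the current plan


def risk_score(m: Metric) -> float:
    """Linearly interpolate expected achievement between the success and failure points.

    The span is signed, so the same formula works whether lower values are better
    (cost, schedule slip) or higher values are better (availability). Results are
    clamped to [0, 100].
    """
    span = m.failure_point - m.success_point
    if span == 0:
        raise ValueError(f"{m.name}: success and failure points must differ")
    fraction = (m.expected - m.success_point) / span
    return 100.0 * min(max(fraction, 0.0), 1.0)


def risk_level(score: float) -> str:
    """Map a 0-100 score to the first band whose upper edge it does not exceed."""
    for upper, label in RISK_BANDS:
        if score <= upper:
            return label
    return RISK_BANDS[-1][1]


def activity_risk(metrics: list[Metric]) -> float:
    """Assumption: an activity is only as low-risk as its riskiest metric."""
    return max(risk_score(m) for m in metrics)


if __name__ == "__main__":
    plan = [
        Metric("schedule slip (days)", success_point=0, failure_point=90, expected=30),
        Metric("cost ($M)", success_point=10.0, failure_point=14.0, expected=13.0),
    ]
    for m in plan:
        score = risk_score(m)
        print(f"{m.name}: {score:.0f} ({risk_level(score)})")
    print("activity:", risk_level(activity_risk(plan)))
```

In this example, a schedule metric with a success point of 0 days of slip, a failure point of 90 days, and an expected slip of 30 days scores about 33 (Moderate), while the cost metric scores 75 (Significant). Using a signed span lets one function handle both "lower is better" and "higher is better" metrics without special cases.
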
To evaluate the capability of the Air Force's dispersed analytic organizations, we need criteria. Accomplishing analytic studies in any particular area requires four aspects:

- Qualified analysts,

- Relevant data,

- Appropriate models, simulations and tools, and

- Authority, including clearances.

We defined analytic capability ratings based on these four aspects, as shown in Table 3. We evaluate our ability at each level of the analysis hierarchy (see Figure 2) and across the core capabilities used in C3RAF. Our initial survey indicates that we need to improve our space, nuclear, and cyber analytic capability within the Air Force. We are using these insights to shape our modeling development and close the identified analytic capability gaps.
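
Table 3's rating definitions are not reproduced here, so the following Python sketch uses a hypothetical four-step ordinal scale to show how ratings built on the four aspects could be rolled up. It rates an organization in one area by its weakest aspect, on the assumption that a study needs all four, and then flags (analysis level, core capability) cells where even the best-rated organization falls below a threshold. The `Rating` scale, the aspect keys, the default threshold, and the names `capability_rating` and `find_gaps` are illustrative assumptions.

```python
from enum import IntEnum


class Rating(IntEnum):
    """Hypothetical ordinal scale; Table 3's actual rating definitions may differ."""
    NONE = 0
    LIMITED = 1
    ADEQUATE = 2
    STRONG = 3


# The four aspects an organization needs to conduct studies in an area.
ASPECTS = ("analysts", "data", "models_tools", "authority")


def capability_rating(scores: dict[str, Rating]) -> Rating:
    """Rate one organization in one area by its weakest aspect.

    Assumption: all four aspects are required, so a strong rating in one
    cannot offset a gap in another.
    """
    missing = [a for a in ASPECTS if a not in scores]
    if missing:
        raise ValueError(f"missing aspects: {missing}")
    return min(scores[a] for a in ASPECTS)


def find_gaps(survey: dict[tuple[str, str, str], dict[str, Rating]],
              threshold: Rating = Rating.ADEQUATE) -> list[tuple[str, str]]:
    """Return (analysis level, core capability) cells where the best
    organization still falls below the threshold.

    survey maps (organization, analysis level, core capability) to aspect scores.
    """
    best: dict[tuple[str, str], Rating] = {}
    for (_org, level, capability), scores in survey.items():
        cell = (level, capability)
        best[cell] = max(best.get(cell, Rating.NONE), capability_rating(scores))
    return sorted(cell for cell, rating in best.items() if rating < threshold)
```

Taking the minimum rather than an average reflects the criteria above: strong analysts cannot compensate for missing data or clearances. Cells that no surveyed organization covers at all never appear in `survey`, so a full assessment would also compare the results against the complete list of analysis levels and core capabilities.
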

Table 3. Organization Analytic Capability Ratings

Another initiative is to revamp the Air Force Standard Analytic Toolkit (AFSAT), which lists accredited significant and enduring analytic models. Accreditation requires sufficient M&S support, including documentation, validation and testing, training, and user groups. After our analysis community reviews and approves a model, AF/A9 lists that model in the AFSAT. In the past, the AFSAT applied a single standard, so models were either in or out, and relatively few models were actually registered. Moreover, as models develop and mature, their support may improve or become outdated. Therefore, AF/A9 is revising the AFSAT to be more flexible and to better promote the visibility and transparency of all M&S assets. We contend this added information will help everyone: model managers can plan their progress, and users will understand each model's current evaluation. Another challenge for the AFSAT is the evolution from stand-alone models to simulation frameworks or environments, in which many system models, such as an F-22 model, may be incorporated into an application, such as an integrated air defense network. Both the system models and the applications should be vetted. AF/A9 currently intends to incorporate the applications, and not the frameworks, into the AFSAT.

A separate topic is preferred software applications. The Air Force perceives several advantages to establishing preferred software applications for analysis. First, training would be more widely applicable as analysts transition between organizations. Second, analysts could collaborate better and move between projects more easily. Third, related applications could be interfaced more easily.
We use “preferred” vice “required” software applications because we readily admit that some analyses are better performed with another analysis package.

These analytic M&S policy initiatives are an important piece of the AF trade space; the other important part is improving our analytic capability with enhanced analytic tools.