The threat of manipulated media has steadily increased as automated manipulation technologies become more accessible and social media continues to provide a ripe environment for viral content sharing.

The speed, scale, and breadth at which massive disinformation campaigns can unfold require computational defenses and automated algorithms to help humans discern which content is real and which has been manipulated or synthesized, as well as why and how.

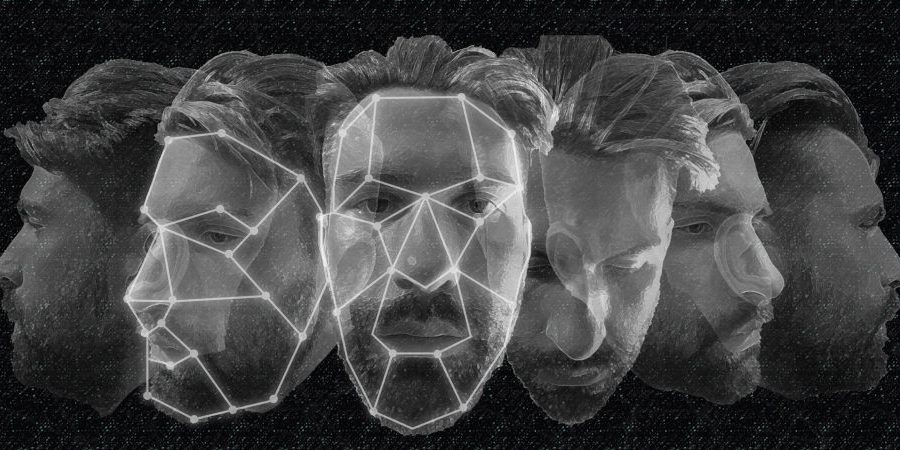

Through the Semantic Forensics (SemaFor) program, previously the Media Forensics program, DARPA has invested in research on detecting, attributing, and characterizing manipulated and synthesized media, commonly known as deepfakes. Those investments have produced hundreds of analytics and methods that can help organizations and individuals protect themselves against the many threats posed by manipulated media.