Summary

When performing defense system analysis with simulation models, a great deal of time and effort is expended creating representations of real-world scenarios in U.S. Department of Defense (DoD) simulation tools. However, once these models have been created and validated, analysts rarely retrieve all the knowledge and insights that the models may yield and are limited to simple explorations because they do not have the time and training to perform more complex analyses manually. Additionally, they do not have software integrated with their simulation tools to automate these analyses and retrieve all the knowledge and insights available from their models.

Simple, manual explorations are inefficient in their use of computing resources and often ineffective in providing the best answers to analyst questions. To derive the greatest benefit from a simulation model, analysts should apply optimization and statistical analysis techniques. Combining these techniques and using available simulation optimization and analysis tools can answer analysts' essential questions and provide key insights for decision-makers. More importantly, the organizational return on investment from simulation studies increases, which builds stakeholder confidence. Tools like these can also be used for model verification and validation.

Introduction

Simulation and optimization are two powerful methodologies widely used across the DoD [1–4]. Simulations are used to understand complex system behavior at multiple levels of fidelity. For example, simulation can be used to perform detailed engineering, design, and testing of individual weapons system components; examine the interaction of components in a single advanced weapon system such as a fighter aircraft or nuclear submarine; and analyze tactical engagements between weapons systems.

Optimization provides a powerful way to determine the best option among many options. For example, military analysts use optimization to maximize the amount of fuel and munitions delivered to an area of operations using the fewest ships and cargo aircraft; determine the best allocation of dollars to minimize the risk of failure in a future war; assign military personnel to bases in a way that maximizes personal preferences and professional development; and allocate blue force weapons to red force targets to maximize the probability of damage while minimizing collateral damage. When resources are limited and mission effectiveness is paramount, optimization offers military decision-makers keen insights and enables them to make the best choices.

The core challenge in simulation optimization is finding the “best options” within environments too complex or uncertain for traditional optimization techniques. Due to their ability to handle these unpredictable factors, simulations are often the only way to model such problems. However, this creates a dilemma: simulation models become necessary precisely because traditional optimization methods fail under these conditions, yet the very complexity built into the simulation model makes finding optimal solutions a daunting task. Until recently, no search process was sophisticated enough to bridge this gap between the power of simulation and the structured goal-finding nature of optimization. In short, no type of search process existed that could effectively integrate simulation and optimization. The same shortcoming is also encountered in settings outside of simulation where complex (realistic) models cannot be analyzed using traditional “closed form” optimization tools like mathematical programming.

Recent developments are changing this picture. Advances in metaheuristics, the domain of optimization that incorporates artificial intelligence and analogs to physical, biological, or evolutionary processes, have led to a new approach that successfully integrates simulation and optimization. As a result, analysts can derive far greater benefit from their simulation models.

Organizations may fail to take full advantage of their simulation models. Even though large amounts of time and money are invested in creating a simulation tool and populating it with validated data, a large part of the valuable knowledge that the model may yield is generally overlooked. Simulation analysts who can access such knowledge are exceedingly valuable to their organization and become highly sought-after resources. Combining optimization and statistical analysis techniques with a simulation model is key to unlocking this knowledge. Optimization techniques can be used to execute a simulation model many times, varying the input parameter values, to determine the best input values to achieve desired system outputs. The results of these simulation runs can then be explored with statistical techniques to better understand the system modeled by the simulation. Essential optimization and analysis questions that can be answered for simulation models by combining these techniques include the following:

- Optimization

  - What combinations of input parameters lead to the best and worst performance of the system?

  - What are the best tradeoffs between multiple competing objectives?

- Analysis

  - Which input parameters have the greatest influence on the system being modeled, and which have the least?

  - Are there good or bad regions of the input parameter space that can be defined by a subset of input parameters with restricted ranges?

  - Are some areas of the parameter trade space more robust to parameter variation than others?
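As a rough illustration of the first analysis question, the sketch below ranks input parameters by the absolute correlation between each input and the output across many simulation runs. The `toy_simulation` function, its coefficients, and the parameter names are hypothetical stand-ins for a real model, and simple correlation is only one of many possible influence measures.

```python
import math
import random

def toy_simulation(x, rng):
    # Hypothetical response: x[0] matters most, x[1] less, x[2] not at all.
    return 5.0 * x[0] + 2.0 * x[1] + 0.0 * x[2] + rng.gauss(0.0, 0.1)

def pearson(a, b):
    # Sample Pearson correlation coefficient between two equal-length lists.
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)

def rank_inputs(names, n_runs, rng):
    # Execute the model many times with randomly varied inputs in [0, 1].
    inputs = [[rng.random() for _ in names] for _ in range(n_runs)]
    outputs = [toy_simulation(x, rng) for x in inputs]
    # Score each input by the strength of its linear association with the output.
    scores = {name: abs(pearson([x[i] for x in inputs], outputs))
              for i, name in enumerate(names)}
    ranking = sorted(names, key=lambda name: scores[name], reverse=True)
    return ranking, scores

ranking, scores = rank_inputs(["x0", "x1", "x2"], 500, random.Random(1))
```

Correlation captures only linear, one-at-a-time effects; interactions between inputs would need other techniques.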

To derive the greatest benefit from a simulation model, an analyst should apply optimization and statistical analysis techniques. Combining these techniques can provide answers to these essential questions and key insights for decision-makers. More importantly, doing so increases the organizational return on investment from analysts and simulation models, for example by providing a range of force structure capacity (size) options.

This article first summarizes some of the most relevant approaches developed for optimizing simulated systems. It then describes the metaheuristic black-box approach that leads practical applications, along with relevant details of how this approach has been implemented and used in commercial software. As a concrete example, some of the mathematics and logic behind a generic simulation optimization software engine are described. Lastly, some use cases that analysts might encounter are presented, together with a discussion of how a simulation optimization and analysis tool integrated with their simulation model can lighten their workload and lead to better study results.

Optimization and Statistical Analysis in Commercial Simulation Packages

Over the past two decades, optimization tools in commercial simulation packages have become widespread and relatively easy to use, even if not all practitioners exploit them. Commercial simulation packages also have analysis tools that explore the variability uncovered through simulation replications (or Monte Carlo runs) for a single set of input parameters. However, the analysis of all simulation runs resulting from an optimization run is less commonly available, at least in an automated, easy-to-digest way.

The underlying statistical techniques discussed in this article are not new. However, in many tools today, to perform variable sensitivity and good and bad region analysis across simulation runs executed with different combinations of input parameter changes, analysts must use multiple tools or perform the simulations and then piece together the results of various statistical techniques. Therefore, these types of valuable simulation analyses are done infrequently and often performed only by technical consultants and advanced analysts. To perform them, users of discrete event simulation packages export their simulation results and then use specialized statistical tools like JMP or SPSS or write code in languages like R or Python for analysis. Users of spreadsheet-based Monte Carlo simulations have more statistical analysis tools at their disposal, but even for these analysts, gaining insights across all simulation runs is not an automated process.

The critical goals of identifying good and bad regions of a parameter trade space and discovering robust solutions are sometimes pursued by more advanced analysts through generating a response surface approximation by coupling design of experiments with simulation. This approximate response surface is then explored through various stochastic optimization techniques [5]. Such an approach generally relies on moving from tool to tool for the different steps in the process—generating the design of experiments, executing the simulations, and performing the stochastic optimization. This type of process has the conspicuous shortcoming of frequently oversimplifying complex response surfaces, which can entail a costly loss of valuable insights.

Classical Approaches for Simulation Optimization

Fu [6] identifies the following four main approaches for optimizing simulations:

- Stochastic approximation (gradient-based approaches)

- Sequential response surface methodology

- Random search

- Sample path optimization (also known as stochastic counterpart)

Stochastic approximation algorithms attempt to mimic the gradient search method used in deterministic optimization. The procedures based on this methodology must estimate the gradient of the objective function to determine a search direction. Stochastic approximation targets continuous variable problems because of its close relationship with the steepest descent gradient search. However, this methodology has also been applied to discrete problems [7].
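One classical instance of this idea is the Kiefer-Wolfowitz scheme, which estimates the gradient from noisy finite differences and takes steps of decreasing size. The sketch below is a minimal one-dimensional illustration; the `noisy_response` function and the step-size constants are assumptions chosen for the example and are not taken from any particular tool.

```python
import random

def noisy_response(x, rng):
    # Hypothetical simulation output: true minimum at x = 3, observed with noise.
    return (x - 3.0) ** 2 + rng.gauss(0.0, 0.1)

def kiefer_wolfowitz(x0, iterations, rng):
    x = x0
    for k in range(1, iterations + 1):
        step_size = 0.5 / k              # a_k: shrinks so the iterates settle down
        perturbation = 0.5 / k ** 0.25   # c_k: shrinks more slowly than a_k
        # Central finite-difference estimate of the gradient from two noisy runs.
        gradient = (noisy_response(x + perturbation, rng)
                    - noisy_response(x - perturbation, rng)) / (2.0 * perturbation)
        x -= step_size * gradient        # move against the estimated gradient
    return x

estimate = kiefer_wolfowitz(0.0, 500, random.Random(42))
```

Each iteration costs two simulation runs per decision variable, which is one reason gradient-based schemes become expensive as the number of inputs grows.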

Sequential response surface methodology is based on the principle of building local metamodels. The “local response surface” is used to determine a search strategy (e.g., moving in the estimated gradient direction), and the process is repeated. In other words, the metamodels do not attempt to characterize the response surface for the entire solution space but rather concentrate on the search’s local area.
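The following sketch illustrates the local metamodel idea under simple assumptions: a two-level factorial design is run around the current point, a first-order (linear) model is fitted to the responses, and the search moves in the estimated descent direction before repeating. The `simulate` function, the design half-width `delta`, and the step length are hypothetical choices for illustration.

```python
import itertools
import random

def simulate(x, rng):
    # Hypothetical noisy response with its minimum at (2, -1).
    return (x[0] - 2.0) ** 2 + 2.0 * (x[1] + 1.0) ** 2 + rng.gauss(0.0, 0.05)

def local_slopes(center, delta, rng, replications=4):
    # Two-level factorial design: every combination of center +/- delta.
    design = list(itertools.product((-1, 1), repeat=len(center)))
    sums = [0.0] * len(center)
    for signs in design:
        point = [c + s * delta for c, s in zip(center, signs)]
        mean_y = sum(simulate(point, rng) for _ in range(replications)) / replications
        for i, s in enumerate(signs):
            sums[i] += s * mean_y
    # For this orthogonal design, the least-squares slope of factor i
    # reduces to sum(sign_i * y) / (number_of_points * delta).
    return [total / (len(design) * delta) for total in sums]

def sequential_rsm(start, iterations, rng, delta=0.25, step=0.2):
    center = list(start)
    for _ in range(iterations):
        slopes = local_slopes(center, delta, rng)   # fit the local metamodel
        center = [c - step * b for c, b in zip(center, slopes)]  # move downhill
    return center

solution = sequential_rsm([0.0, 0.0], 30, random.Random(3))
```

The metamodel is rebuilt at every iteration and is only trusted near the current center, which mirrors the local character described above.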

A random search method moves through the solution space by randomly selecting a point from the current point’s neighborhood. This implies that a neighborhood must be defined as part of developing a random search algorithm. Random search has been applied mainly to discrete problems, and its appeal is based on existing theoretical convergence proofs. Unfortunately, these theoretical convergence results mean little in practice where it is more important to find high-quality solutions within a reasonable length of time than to guarantee optimum convergence in an infinite number of steps.
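A minimal sketch of such a method on an integer lattice is shown below. The neighborhood is assumed to be all points that differ by one unit in a single coordinate, and the `objective` function is a hypothetical deterministic stand-in for a simulation response.

```python
import random

def objective(x):
    # Hypothetical response to minimize; the best point is (7, 3).
    return (x[0] - 7) ** 2 + (x[1] - 3) ** 2

def random_search(start, lower, upper, iterations, rng):
    current, current_value = list(start), objective(start)
    for _ in range(iterations):
        # Neighborhood: change one randomly chosen coordinate by +1 or -1.
        i = rng.randrange(len(current))
        candidate = list(current)
        candidate[i] = min(upper, max(lower, candidate[i] + rng.choice((-1, 1))))
        value = objective(candidate)
        # Accept the neighbor only if it is at least as good as the current point.
        if value <= current_value:
            current, current_value = candidate, value
    return current, current_value

best_point, best_value = random_search([0, 0], 0, 10, 500, random.Random(5))
```

With a noisy simulation, each comparison would typically be based on several replications rather than a single evaluation.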

Sample path optimization is a methodology that exploits the knowledge and experience developed for deterministic continuous optimization problems. The idea is to optimize a deterministic function that is based on n random variables, where n is the size of the sample path. In the simulation context, the method of common random numbers is used to provide the same sample path to calculate the response over different values of the input factors. Sample path optimization owes its name to the fact that the estimated optimal solution that it finds is based on a deterministic function built with one sample path obtained with a simulation model. Generally, n needs to be large for the approximating optimization problem to be close to the original optimization problem [8].
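The sketch below illustrates the idea with a hypothetical stocking problem: one sample path of n random demands is drawn once and then reused (common random numbers) for every candidate quantity, so the sample-average cost becomes a deterministic function that can be minimized directly. The cost parameters and demand distribution are assumptions made for the example.

```python
import random

UNDERAGE_COST = 3.0  # hypothetical penalty per unit of unmet demand
OVERAGE_COST = 1.0   # hypothetical penalty per unit of unused supply

def draw_sample_path(n, seed):
    # One fixed sample path of n demands, reused for every candidate solution.
    rng = random.Random(seed)
    return [rng.gauss(100.0, 20.0) for _ in range(n)]

def sample_average_cost(quantity, demands):
    # Deterministic once the sample path is fixed.
    total = 0.0
    for demand in demands:
        total += (OVERAGE_COST * max(quantity - demand, 0.0)
                  + UNDERAGE_COST * max(demand - quantity, 0.0))
    return total / len(demands)

def optimize_on_sample_path(demands, candidates):
    # Any deterministic optimizer could be used here; a full scan keeps it simple.
    return min(candidates, key=lambda q: sample_average_cost(q, demands))

demands = draw_sample_path(2000, seed=7)
best_quantity = optimize_on_sample_path(demands, range(0, 201))
```

For this toy cost structure, the theoretical optimum is the 75th percentile of demand (about 113.5 for the assumed distribution), and the sample-path solution approaches it as n grows.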

Leading commercial simulation software employs metaheuristics as the methodology of choice to provide optimization capabilities to their analysts. This approach to simulation optimization is explored in the next section.

Simulation Optimization Approach With Metaheuristics

Metaheuristics provide a way of considerably improving the performance of simple heuristic procedures. The search strategies proposed by metaheuristic methodologies result in iterative procedures that can explore solution spaces beyond the solution resulting from applying a simple heuristic. For example, genetic algorithms (GAs) and scatter search (SS) are population-based metaheuristics designed to operate on a set of solutions maintained from iteration to iteration. On the other hand, metaheuristics like simulated annealing and tabu search (TS) typically maintain only one solution by applying mechanisms to transform the current solution into a new one. Metaheuristics have been developed to solve complex optimization problems in many areas, with combinatorial optimization being one of the most fruitful. Very efficient procedures can be achieved by relying on context information, i.e., by taking advantage of specific information about the problem. The solution approach may be viewed as the result of adapting metaheuristic strategies to specific optimization problems. In these cases, there is no separation between the solution procedure and the model that represents the complex system.

Metaheuristics can be used to create solution procedures that are context independent, i.e., procedures capable of tackling several problem classes and not using specific information from the problem to customize the search. The original GA designs were based on this paradigm, where solutions to all problems were represented as a string of zeros and ones [9]. The advantage of this design is that the same solver can be used to solve a wide variety of problems because the solver uses strategies to manipulate the string of zeros and ones and a decoder is used to translate the string into a solution to the problem under consideration. The obvious disadvantage is that the solutions found by context-independent solvers might be inferior to those of specialized procedures when applying the same amount of computer effort (e.g., search time). Solvers that do not use context information are referred to as general-purpose or “black box” optimizers.
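To make the binary-string paradigm concrete, the following sketch shows a classical-style GA whose solver manipulates only strings of zeros and ones, with a separate decoder translating each string into a problem solution. The fitness function, population size, mutation rate, and use of elitism are illustrative assumptions and are not taken from any specific solver.

```python
import random

def decode(bits, lower, upper):
    # Decoder: map a binary string onto a real value in [lower, upper].
    integer = int("".join(map(str, bits)), 2)
    return lower + (upper - lower) * integer / (2 ** len(bits) - 1)

def one_point_crossover(a, b, rng):
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def mutate(bits, rate, rng):
    # Flip each bit independently with a small probability.
    return [1 - b if rng.random() < rate else b for b in bits]

def genetic_algorithm(fitness, n_bits, pop_size, generations, rng):
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        next_population = ranked[:2]  # elitism: carry over the two best strings
        while len(next_population) < pop_size:
            # Binary tournament selection of two parents.
            parent_1 = max(rng.sample(population, 2), key=fitness)
            parent_2 = max(rng.sample(population, 2), key=fitness)
            child = one_point_crossover(parent_1, parent_2, rng)
            next_population.append(mutate(child, 0.02, rng))
        population = next_population
    return max(population, key=fitness)

def fitness(bits):
    # Hypothetical problem: maximize -(x - 2.5)^2 for x in [0, 10].
    return -(decode(bits, 0.0, 10.0) - 2.5) ** 2

best_bits = genetic_algorithm(fitness, 16, 30, 60, random.Random(11))
best_x = decode(best_bits, 0.0, 10.0)
```

Only `decode` and `fitness` know anything about the problem; swapping them out lets the same solver tackle a different problem, which is the context-independent property described above.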

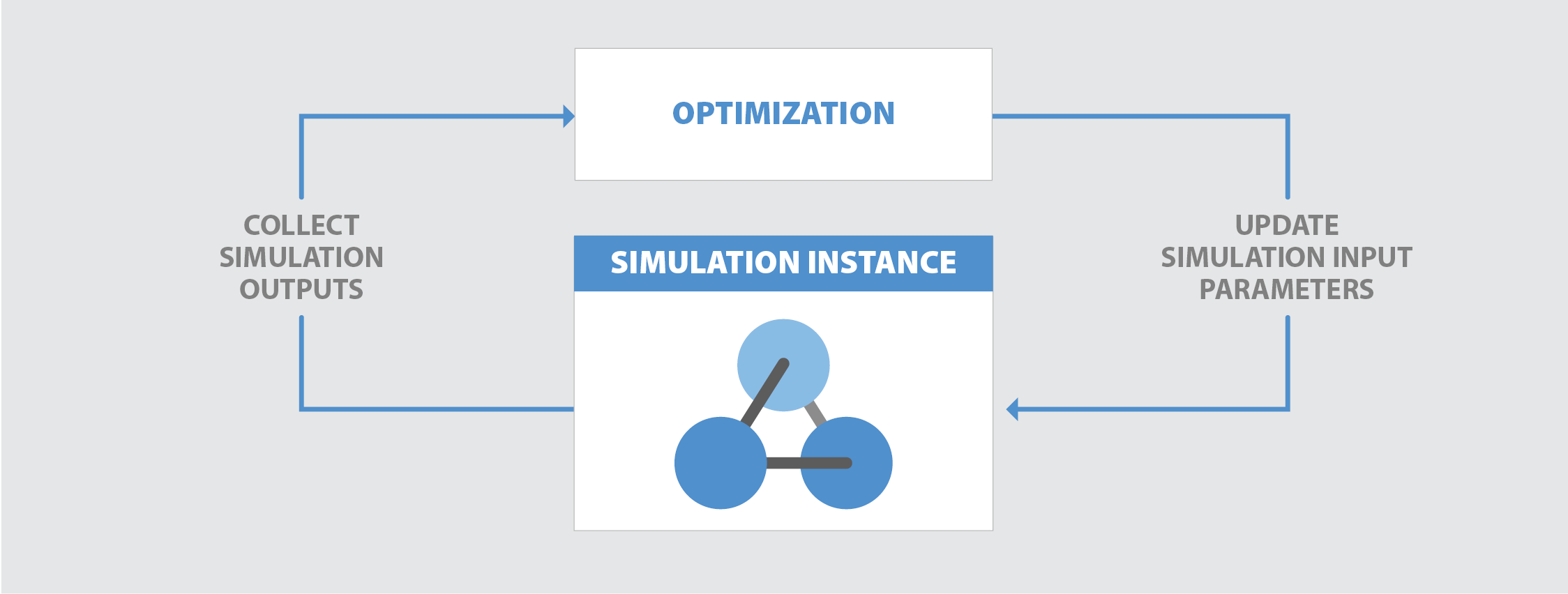

Figure 1 shows the black box approach to simulation optimization favored by procedures based on metaheuristic methodology. In this approach, the metaheuristic optimizer (labeled as “optimization”) chooses a set of values for the input parameters (i.e., factors or decision variables) and uses the responses generated by the simulation model or instance to make decisions for selecting the next trial solution.

Figure 1. Black Box Approach to Simulation Optimization (Source: OptTek Systems).

One of the main design considerations when developing a general-purpose optimizer is which solution representation to employ. This representation is used to establish the communication between the optimizer and the simulation (which is the abstraction of the complex system). As previously mentioned, classical GAs used binary strings to represent solutions. This representation is not particularly convenient in some instances like when a natural solution representation is a sequence of numbers, as in the case of permutation problems. One of the most flexible representations is an n-dimensional vector, where each component may be a continuous or integer bounded variable. This representation can be used in a wide range of applications, which includes all those problems that can be formulated as mathematical programs.
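The sketch below illustrates how such a vector representation can serve as the only interface between an optimizer and a simulation. The `Variable` and `BlackBoxOptimizer` classes, the `ask`/`tell` method names, and the toy `simulation` are all hypothetical; the search rule (random restarts plus perturbation of the best known solution) is a deliberately simple placeholder rather than the method used by any commercial engine.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Variable:
    name: str
    lower: float
    upper: float
    integer: bool = False

    def clip(self, value):
        # Enforce the bounds and, for integer variables, integrality.
        value = min(self.upper, max(self.lower, value))
        return int(round(value)) if self.integer else float(value)

class BlackBoxOptimizer:
    def __init__(self, variables, seed=0):
        self.variables = variables
        self.rng = random.Random(seed)
        self.best_x, self.best_y = None, float("inf")

    def ask(self):
        # Propose the next trial solution as a vector of bounded values.
        if self.best_x is None or self.rng.random() < 0.3:
            return [v.clip(self.rng.uniform(v.lower, v.upper))
                    for v in self.variables]
        return [v.clip(x + self.rng.gauss(0.0, 0.1 * (v.upper - v.lower)))
                for v, x in zip(self.variables, self.best_x)]

    def tell(self, x, y):
        # Receive the simulation response; the optimizer sees nothing else.
        if y < self.best_y:
            self.best_x, self.best_y = list(x), y

def simulation(x):
    # Hypothetical black box to minimize; internals are hidden from the optimizer.
    speed, aircraft = x
    return (speed - 420.0) ** 2 / 1e4 + (aircraft - 6) ** 2

def run(n_trials, seed=0):
    variables = [Variable("speed_knots", 200.0, 600.0),
                 Variable("num_aircraft", 1, 12, integer=True)]
    optimizer = BlackBoxOptimizer(variables, seed)
    history = []
    for _ in range(n_trials):
        x = optimizer.ask()
        y = simulation(x)
        optimizer.tell(x, y)
        history.append((x, y))
    return optimizer, history
```

Calling `run(300)` returns the optimizer, whose `best_x` and `best_y` hold the best trial found, together with the full history of trials, which is the raw material for the statistical analyses discussed earlier.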

SS

SS is a population-based metaheuristic for optimization. It has been applied to problems with continuous and discrete variables and with one or multiple objectives. The success of SS as an optimization technique is well documented in a constantly growing number of journal articles, book chapters [10–12], and a book [13]. SS consists of the following five phases:

- Diversification Generation

- Improvement

- Reference Set Update

- Subset Generation

- Solution Combination

The Diversification Generation phase is used to generate a set of diverse solutions that are the basis for initializing the search. The Improvement phase transforms solutions to improve quality (typically measured by the objective function value) or feasibility (typically measured by some degree of constraint violation). The Reference Set Update phase refers to the process of building and maintaining a set of solutions that are combined in the main iterative loop of any SS implementation. The Subset Generation phase produces subsets of reference solutions which become the input to the combination method. The Solution Combination phase uses the output from the subset generation method to create new trial solutions. New trial solutions are the results of combining two or more reference solutions.
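A compact sketch of how the five phases fit together is given below for a small continuous problem. It is a simplified illustration under stated assumptions: the `objective` function, the bounds, the reference set sizes, the random local search used for improvement, and the single linear combination per pair are all hypothetical choices and do not reproduce any published or commercial SS implementation.

```python
import itertools
import math
import random

LOWER, UPPER, DIM = -5.0, 5.0, 2  # hypothetical bounds and dimension

def objective(x):
    # Stand-in for an expensive simulation response; minimum at (1, 1).
    return sum((xi - 1.0) ** 2 for xi in x)

def clip(x):
    return [min(UPPER, max(LOWER, xi)) for xi in x]

def diversification_generation(n, rng):
    # Stratified sampling: each variable's range is cut into n sub-ranges.
    width = (UPPER - LOWER) / n
    columns = []
    for _ in range(DIM):
        column = [LOWER + (i + rng.random()) * width for i in range(n)]
        rng.shuffle(column)
        columns.append(column)
    return [list(point) for point in zip(*columns)]

def improvement(x, rng, tries=10, step=0.3):
    # Simple local search: keep random nearby moves that reduce the objective.
    best, best_value = x, objective(x)
    for _ in range(tries):
        candidate = clip([xi + rng.uniform(-step, step) for xi in best])
        value = objective(candidate)
        if value < best_value:
            best, best_value = candidate, value
    return best

def reference_set_update(pool, n_quality=5, n_diverse=3):
    # Keep the best solutions, then add those farthest from the set so far.
    ranked = sorted(pool, key=objective)
    reference, rest = ranked[:n_quality], ranked[n_quality:]
    for _ in range(min(n_diverse, len(rest))):
        farthest = max(rest, key=lambda s: min(math.dist(s, r) for r in reference))
        reference.append(farthest)
        rest.remove(farthest)
    return reference

def subset_generation(reference):
    # All pairs of reference solutions.
    return itertools.combinations(reference, 2)

def solution_combination(a, b, rng):
    # A point on the line through a and b, possibly beyond either endpoint.
    weight = rng.uniform(-0.5, 1.5)
    return clip([ai + weight * (bi - ai) for ai, bi in zip(a, b)])

def scatter_search(iterations, rng):
    pool = [improvement(s, rng) for s in diversification_generation(20, rng)]
    reference = reference_set_update(pool)
    for _ in range(iterations):
        trials = [improvement(solution_combination(a, b, rng), rng)
                  for a, b in subset_generation(reference)]
        reference = reference_set_update(reference + trials)
    return min(reference, key=objective)

best = scatter_search(20, random.Random(0))
```

Keeping both high-quality and deliberately diverse solutions in the reference set is what lets the combination step explore new regions while still refining the best solutions found so far.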

Extensions of the basic SS framework can be created to take advantage of additional metaheuristic search strategies, such as the memory-based structures of TS. There are significant differences between classical GAs and SS. While classical GAs rely heavily on randomization and limiting operations to create new solutions (e.g., one-point crossover on binary strings), SS employs strategic choices and memory, along with structured combinations of solutions, to create new solutions. SS explicitly encourages the use of additional heuristics to process selected reference points in search of improved solutions. This is especially advantageous in settings where heuristics that exploit the problem structure can either be developed or are already available.

Optimization Engines

Many commercial and open-source simulation optimization engines exist. These engines often implement a composite of prediction and optimization technologies to tackle complex problems. They are particularly well-suited for scenarios where evaluating the objective function of the problem is computationally expensive. These engines utilize prediction models to help guide the search and estimate objective function values before solutions are evaluated. Commercial examples include OptQuest, OptDef, Simulink Design Optimization, and Hexaly. Open-source examples include the Python libraries ParMOO and RBFOpt.

Presented in this article are examples and use cases of a commercial solution that implements SS in the simulation optimization engine. This solution has been built under the following assumptions:

- A computationally expensive black box is used to evaluate the objective function of the optimization problem being solved.

- Prediction models within the engine have the dual purpose of assisting in establishing search directions and estimating the value of the objective function before solutions are processed by the objective function evaluator.

Prediction Technologies

Optimization engines often include multivariate linear regression modules to assess the linearity of unknown objective functions. If a reasonably accurate linear approximation can be obtained, this module may help filter out trial solutions unlikely to yield improvements before they are submitted for full evaluation, thus saving computational resources.
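The filtering idea can be sketched as follows, assuming a minimization problem. A linear model is fitted by ordinary least squares to the solutions already evaluated; if the fit is good enough, trial solutions predicted to be no better than the best known value are screened out before any simulation is run. The function names and the R-squared threshold are hypothetical and do not describe the internals of any particular engine.

```python
def fit_linear(X, y):
    # Ordinary least squares with an intercept, solved via the normal equations.
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    c = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        c[col], c[pivot] = c[pivot], c[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for k in range(col, p):
                A[r][k] -= factor * A[col][k]
            c[r] -= factor * c[col]
    # Back substitution.
    coef = [0.0] * p
    for i in reversed(range(p)):
        tail = sum(A[i][k] * coef[k] for k in range(i + 1, p))
        coef[i] = (c[i] - tail) / A[i][i]
    return coef

def predict(coef, x):
    return coef[0] + sum(b * xi for b, xi in zip(coef[1:], x))

def r_squared(coef, X, y):
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - predict(coef, x)) ** 2 for x, yi in zip(X, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

def screen_trials(trials, X, y, min_r2=0.9):
    coef = fit_linear(X, y)
    if r_squared(coef, X, y) < min_r2:
        return list(trials)  # linear model not trusted: evaluate everything
    best_so_far = min(y)
    # Keep only trials predicted to improve on the best evaluated solution.
    return [t for t in trials if predict(coef, t) < best_so_far]

# Hypothetical evaluated solutions generated from y = 2 + 3*x0 - x1.
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1], [1, 3]]
y = [2 + 3 * a - b for a, b in X]
kept = screen_trials([[0, 2], [3, 0]], X, y)
```

Here the trial `[0, 2]` is kept because its predicted value is below the best observed value, while `[3, 0]` is discarded without being simulated.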

Neural networks are another prediction technology used in some optimization engines. These networks can be trained on already evaluated solutions to predict inferior trial solutions as well as suggest promising, high-quality solutions for subsequent evaluation.

Optimization Engine Capabilities and Practical Implications

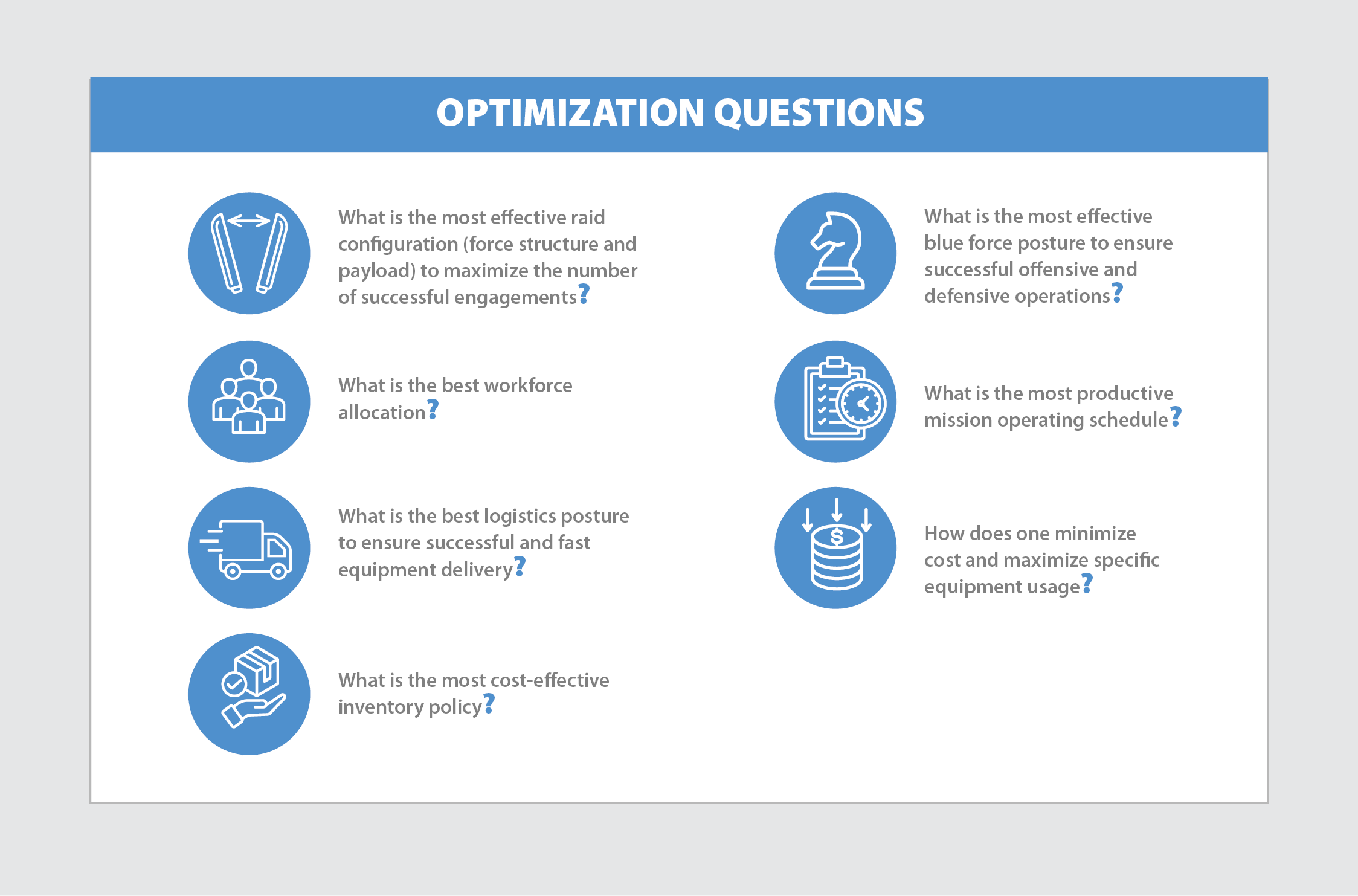

Optimization engines have the potential to replace manual trial-and-error or basic parametric search methods, providing a more efficient way to identify promising decisions within a simulated or modeled domain. This is particularly valuable in defense simulations where analysts often lack tools to guide the selection of alternatives that yield optimal decisions. Figure 2 lists some optimization questions that are relevant to defense analysts.

Figure 2. Optimization Questions for Defense Analysts (Source: gravisio, Uniconlabs, Pure Template, Pexelpy, Iconbunny, and oksanavectorart Canva).

Efficiently answering these questions often requires evaluating a massive number of scenarios through simulation or modeling tools. Optimization engines can automate the search for the best solutions. They enable decision-makers to define constraints, such as the following:

- Ranges of key parameters

- Budget limitations

- Asset capacities

- Acceptable minimum and maximum output values

- Limits on resources used

- Links between components or subsystems

The optimization engine strategically explores options within these constraints, successively passing each option it selects to the simulation or technical model for evaluation. The resulting search isolates scenarios that yield the highest quality outcomes for the provided objectives, according to the criteria selected by the decision-maker.
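As a rough sketch of how such constraints might be expressed, the example below checks input-side constraints (parameter ranges, a budget, and a link between components) before an option is simulated and an output-side constraint (a minimum acceptable response) afterward. All names, costs, limits, and the `toy_simulation` function are hypothetical values invented for illustration.

```python
UNIT_COST = {"fighters": 60.0, "tankers": 100.0}  # hypothetical cost per asset
RANGES = {"fighters": (0, 24), "tankers": (0, 6)}  # ranges of key parameters
BUDGET = 1500.0                                    # budget limitation
MIN_COVERAGE = 0.8                                 # acceptable minimum output

def satisfies_input_constraints(option):
    in_range = all(RANGES[k][0] <= option[k] <= RANGES[k][1] for k in RANGES)
    cost = sum(UNIT_COST[k] * option[k] for k in UNIT_COST)
    # Link between components: at most four fighters per tanker.
    linked = option["fighters"] <= 4 * option["tankers"]
    return in_range and cost <= BUDGET and linked

def toy_simulation(option):
    # Hypothetical stand-in for the simulation or technical model.
    coverage = min(1.0, 0.05 * option["fighters"] + 0.05 * option["tankers"])
    return {"coverage": coverage}

def satisfies_output_constraints(response):
    return response["coverage"] >= MIN_COVERAGE

def evaluate(option):
    # Options violating input constraints are rejected without simulating them.
    if not satisfies_input_constraints(option):
        return None
    response = toy_simulation(option)
    return response if satisfies_output_constraints(response) else None
```

Checking input constraints first avoids spending simulation time on options that could never be accepted, while output constraints can only be checked once the model has been run.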

Use Case Examples

When coupled with appropriate defense simulation models, a simulation optimization and analysis tool enables optimization in various real-world scenarios, such as developing and refining concepts of operation, optimizing air defense configurations, maximizing satellite coverage, performing cybersecurity vulnerability assessments, and launching and deploying hypersonic weapons.

- Optimal Blue Response. Figure 3 shows a notional Advanced Framework for Simulation, Integration, and Modeling (AFSIM) scenario. Incoming blue forces attempt to hit all red targets. In this case, an analyst’s objective would be to maximize the number of hits while minimizing the number of blue aircraft used. Using the fewest blue forces has the added benefit of cost savings while still achieving the mission objective. A typical optimization setup would include varying parameters such as weapon type (categorical variable type), number of weapons (integer variable type), and the amount of time each aircraft has on a target (integer variable type). The simulation optimization software then uses the metaheuristic methodologies described earlier to explore the space and find the optimal response (i.e., reach the analyst’s objective).

- Maximal Satellite Coverage. An analyst may need to optimize satellite target coverage (i.e., swath). In this case, the objective could be finding the optimal satellite configuration to get the best coverage for high-priority targets/areas. An optimization setup could include varying spacecraft orbital parameters and system configuration (e.g., varying orbits, number, and type of spacecraft) to reach the objective. The analyst can also specify multiple objectives that would balance the best satellite configuration with cost (perhaps an additional variable would be fuel/energy amount). Utilizing multiple objectives allows the analyst to make data-driven decisions that best meet mission needs.

- Cybersecurity Optimization and Analysis. An analyst may want to test the limits of the information technology system by conducting vulnerability assessments. Unlike the Figure 3 scenario, which has blue forces on the offensive, the cybersecurity realm focuses on a defensive posture. Variable parameters may include the number and type of servers that are part of the system architecture, as well as the known speed of response to a detected threat. If there is a cyberattack on the system, the analyst can optimize the solution to minimize the loss of function and the duration of the attack’s effect. With simulation optimization, the analyst can explore scenarios in the cyber kill chain that are the most detrimental and identify key components that must be protected at all costs.

Figure 3. Notional AFSIM Scenario (Source: OptTek Systems, Billion Photos [Canva]).
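When two objectives compete, as with maximizing hits while minimizing blue aircraft in the first use case, the result of interest is the set of nondominated tradeoffs rather than a single answer. The sketch below extracts that set from a list of simulation results; the `(hits, aircraft)` tuples are invented numbers for illustration and do not come from any AFSIM run.

```python
def dominates(a, b):
    # Each result is (hits, aircraft): more hits and fewer aircraft are better.
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better

def pareto_front(results):
    # Keep every result that no other result dominates.
    return [r for r in results
            if not any(dominates(other, r) for other in results)]

# Hypothetical outcomes of six simulated configurations.
results = [(10, 8), (10, 6), (7, 4), (7, 5), (4, 2), (3, 3)]
front = pareto_front(results)
```

For these invented results, the front contains `(10, 6)`, `(7, 4)`, and `(4, 2)`; each remaining option is matched or beaten on both objectives by one of them.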

Conclusions

The fundamental principles of simulation optimization, from established research approaches to the metaheuristic strategies common in commercial applications, have been explored. Key implementation considerations, such as solution representation, metamodel utilization, and constraint formulation, were highlighted.

The synergy between simulation and optimization unlocks a level of solution quality far beyond manual “what-if” analysis, especially when the number of possible scenarios is vast. An overview of a commercial solution’s optimization engine was provided, and the potential of simulation optimization tools to tackle complex defense-related problems when paired with simulation models was demonstrated.

Simulation optimization remains a vibrant field of research and development. Simulation optimization tools provide analysts with powerful resources to analyze complex systems and make data-driven decisions that optimize project and mission objectives. There is still much to learn and discover about how to optimize simulated systems from the theoretical and practical points of view. The rich variety of practical applications and the dramatic gains already achieved by simulation optimization ensure that this area will provide an intensive focus for study and a growing source of practical advances in the future.

References

1. Boginski, V., E. L. Pasiliao, and S. Shen. “Special Issue on Optimization in Military Applications.” Optimization Letters, vol. 9, no. 8, pp. 1475–1476, 2015.

2. Dirik, N., S. N. Hall, and J. T. Moore. “Maximizing Strike Aircraft Planning Efficiency for a Given Class of Ground Targets.” Optimization Letters, vol. 9, no. 8, pp. 1729–1748, 2015.

3. Kannon, T. E., S. G. Nurre, B. J. Lunday, and R. R. Hill. “The Aircraft Routing Problem With Refueling.” Optimization Letters, vol. 9, no. 8, pp. 1609–1624, 2015.

4. Hill, R. R., J. O. Miller, and G. A. McIntyre. “Simulation Analysis: Applications of Discrete Event Simulation Modeling to Military Problems.” Winter Simulation Conference Proceedings, Arlington, VA, IEEE Computer Society, 2001.

5. Samuelson, D. “When Close Is Better Than Optimal.” ORMS-Today, vol. 37, no. 6, pp. 144–152, 2010.

6. Fu, M. “Optimization for Simulation: Theory and Practice.” INFORMS Journal on Computing, vol. 14, no. 3, pp. 192–215, 2002.

7. Gerencser, L., S. D. Hill, and Z. Vago. “Optimization Over Discrete Sets via SPSA.” Proceedings of the 38th IEEE Conference on Decision and Control, Phoenix, AZ, vol. 2, pp. 1791–1795, 1999.

8. Andradottir, S. “A Review of Simulation Optimization Techniques.” Proceedings of the 1998 Winter Simulation Conference, D. J. Medeiros, E. F. Watson, J. S. Carson, and M. S. Manivannan (editors), pp. 151–158, 1998.

9. Katoch, S., S. S. Chauhan, and V. Kumar. “A Review on Genetic Algorithm: Past, Present, and Future.” Multimedia Tools and Applications, vol. 80, pp. 8091–8126, https://doi.org/10.1007/s11042-020-10139-6, 2021.

10. Glover, F., M. Laguna, and R. Martí. “Scatter Search.” Advances in Evolutionary Computation: Theory and Applications, New York, NY: Springer-Verlag, 2003.

11. Glover, F., M. Laguna, and R. Martí. “Scatter Search and Path Relinking: Advances and Applications.” Handbook of Metaheuristics, Boston, MA: Kluwer Academic Publishers, 2003.

12. Glover, F., M. Laguna, and R. Martí. “New Ideas and Applications of Scatter Search and Path Relinking.” New Optimization Techniques in Engineering, Berlin, Germany: Springer, 2004.

13. Laguna, M., and R. Martí. Scatter Search: Methodology and Implementations in C. Boston, MA: Kluwer Academic Publishers, 2003.

Biographies

Jose Ramirez is vice president of government services at OptTek Systems, Inc. He served as the Warfighting Analysis Division Chief (Colonel) in the Joint Staff J-8, where he led, managed, and provided analytical guidance to a 40-person team of analytical modelers, national defense strategists, and cybersecurity personnel. He has guided campaign-level, state-of-the-art, discrete-event modeling and simulation and data analytics supporting the Chairman of the Joint Chiefs of Staff and Combatant Commanders. He has strategized and conducted operational assessments of capabilities vs. peer adversaries for insights on future equipment modernization investment options and implemented advanced data analytics technology on the DoD’s global munitions requirements process. Dr. Ramirez holds a B.S. in civil engineering from the University of Notre Dame, an M.S. in government information leadership from the National Defense University’s College of Information and Cyberspace, an M.S.E. in operations research and industrial engineering from the University of Texas at Austin, and a Ph.D. in operations management (operations research) from the University of Colorado at Boulder.

Benjamin Thengvall is chief operating officer at OptTek Systems, Inc. He is an expert in mathematical modeling, real-time optimization software and services, transportation and scheduling problems, agent-based and discrete-event simulation, and simulation optimization and analysis. He has spent his career providing innovative software solutions to complex real-world problems through mathematical modeling, simulation, and metaheuristic techniques in commercial and government spheres. Dr. Thengvall holds a B.S. in mathematics from the University of Nebraska-Lincoln and an M.S.E. and Ph.D. in operations research and industrial engineering from the University of Texas at Austin.