Introduction

In the digital age, the cyber domain has become an intricate network of systems and interactions that underpin modern society. Sim2Real techniques, originally developed with notable success in domains such as robotics and autonomous driving, have gained recognition for their remarkable ability to bridge the gap between simulated environments and real-world applications. While their primary applications have thrived in these domains, their potential implications and applications within the broader cyber domain remain relatively unexplored. This article examines the emerging intersection of Sim2Real techniques and the cyber realm, exploring their challenges, potential applications, and significance in enhancing our understanding of this complex landscape.

Sim2Real: Concepts and Methodologies

Sim2Real, an abbreviation for “Simulation to Reality,” is a transformative approach that addresses the challenge of transferring knowledge acquired in simulated environments to real-world applications [1]. It plays a vital role in various domains by leveraging simulated environments to train and prepare for real-world scenarios. While Sim2Real is commonly associated with machine learning (ML), its applications extend beyond this field, offering opportunities for enhanced learning, testing, and preparation in diverse scenarios. This section explores Sim2Real’s foundational concepts and methodologies, which collectively enable the seamless transfer of knowledge from simulation to practical applications, a principle that has wide-ranging implications, including potential applications within the cyber domain.

The Foundation of Sim2Real

At the heart of Sim2Real lies the concept of training ML models in simulated environments, where data is abundant, diverse, and controllable. This approach stands in contrast to traditional methods, which often require training models directly in real-world settings, where data collection can be expensive, limited, or impractical. By leveraging the advantages of simulation, Sim2Real techniques enable the rapid development, refinement, and evaluation of ML models, offering a more cost-effective and flexible solution.

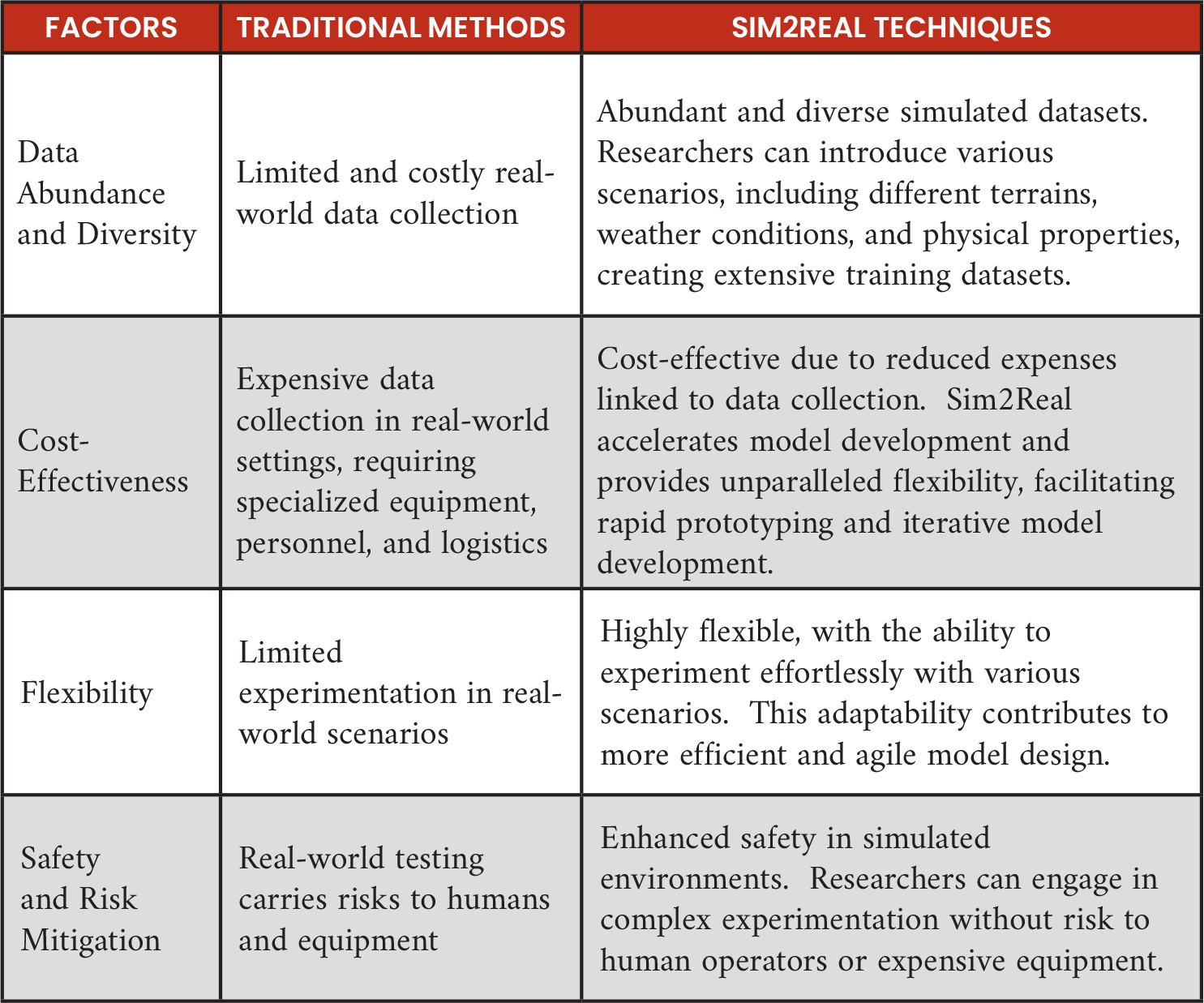

The adoption of Sim2Real techniques is rooted in addressing the limitations and challenges associated with real-world model training. These challenges can be broadly grouped into the following key areas, as defined in references 2–7 and highlighted in Table 1.

Table 1. Comparative Analysis of Traditional Model Training vs. Sim2Real Techniques

Data Abundance and Diversity

Real-world data collection often falls short in providing diverse and ample datasets. This limitation can hinder the training of ML models, particularly in tasks requiring robust generalization. In Sim2Real, simulations serve as a solution. These environments offer a vast and diverse source of data, creating extensive training datasets [2, 3]. Within these digital realms, researchers have the capacity to introduce an expansive array of scenarios, from varied terrains to different weather conditions and physical properties [5]. The diversity inherent in simulations empowers models to generalize effectively, adapting seamlessly to a wide spectrum of real-world scenarios [7].

Cost-Effectiveness and Flexibility

The cost of data collection in real-world settings can be a significant barrier, particularly in domains such as autonomous vehicles. Traditional methods may not only be expensive but also less flexible when it comes to experimenting with different scenarios [4, 7]. Sim2Real, in this context, emerges as a transformative solution. This approach substantially reduces expenses linked to data collection, making it a crucial factor for industries where cost efficiency is imperative. Sim2Real’s cost-effective nature accelerates model development and, more importantly, provides unparalleled flexibility. Researchers can effortlessly experiment with various scenarios, facilitating rapid prototyping and iterative model development [5]. This adaptability contributes to more efficient and agile model design.

Safety and Risk Mitigation

Safety is a paramount concern in high-stakes domains like healthcare, aerospace, and disaster response. Real-world testing in these areas can carry significant risks to human operators and valuable equipment [6]. Simulated environments emerge as the safer alternative [2–4]. Within these controlled digital realms, the safety of both human operators and valuable assets is a top priority. Sim2Real effectively mitigates the risks associated with real-world testing. Researchers can engage in complex experimentation without peril, confident that the simulated environment poses no danger to human operators or expensive equipment. This enhanced safety is pivotal, particularly in domains where failure is not an option.

Key Methodologies

Sim2Real encompasses a range of methodologies, each tailored to specific applications and domains. A fundamental aspect in this pursuit is achieving high-fidelity simulations that closely mimic real-world conditions. To narrow the gap between the data distributions of simulated environments and actual real-world scenarios, several techniques have emerged as key contributors.

Domain randomization, a widely used technique in the realm of ML and Sim2Real transfer, plays a crucial role in enhancing the adaptability and robustness of models [8, 9]. This technique involves training models across a variety of simulated environments, each distinguished by randomized characteristics. The objective is to instill ML models with the capability to effectively manage uncertainty and adapt to unforeseen variations, commonly encountered in real-world settings.

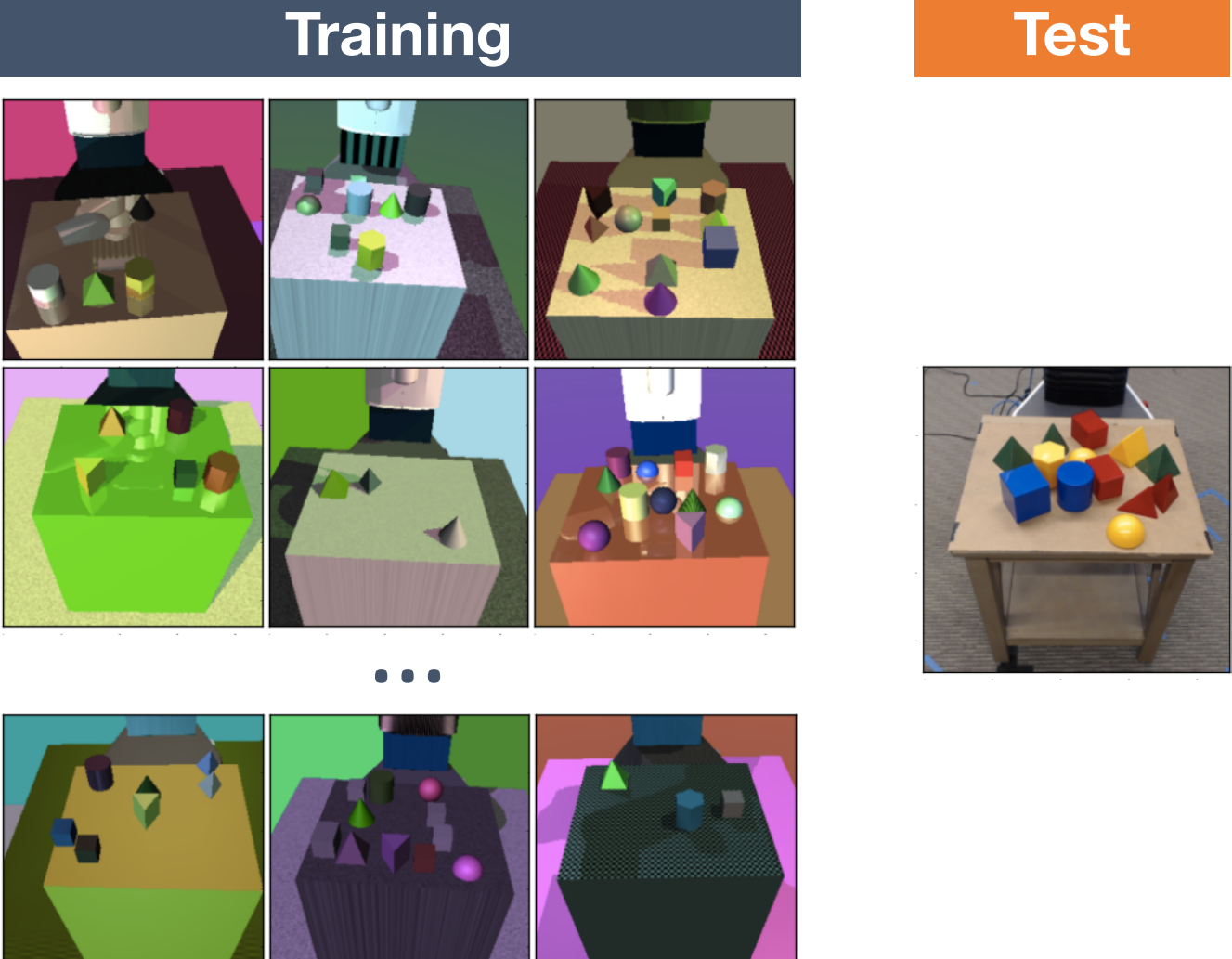

Numerous studies have explored the effectiveness of Sim2Real transfer methods based on domain randomization [10–14]. These studies have demonstrated the potential of domain randomization in creating a diverse and extensive training dataset. Figure 1, which illustrates several variations of low-fidelity training images with random camera positions, lighting conditions, object positions, and nonrealistic textures, showcases the application of domain randomization in generating a robust training set. This diversity enables models to excel in making accurate predictions when confronted with the intricacies of real-world environments, even when faced with previously unseen conditions during training.

Figure 1. Variations of Low-Fidelity Training Images for Domain Randomization (Source: Tobin et al. [10]).
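To make the idea concrete, the following minimal sketch shows how randomized scene parameters of the kind listed above (camera position, lighting, object position, texture) might be sampled before rendering each training image. The `SceneParams` fields, the value ranges, and the function names are illustrative assumptions and are not taken from Tobin et al. [10] or any specific simulator.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class SceneParams:
    """One randomized scene configuration (all fields are hypothetical)."""
    camera_height_m: float
    light_intensity: float
    object_x: float
    object_y: float
    texture_id: int


def sample_scene(rng: random.Random) -> SceneParams:
    # Each parameter is drawn independently from a wide, assumed range so
    # that the real world looks like "just another variation" to the model.
    return SceneParams(
        camera_height_m=rng.uniform(0.5, 2.0),
        light_intensity=rng.uniform(0.2, 1.5),
        object_x=rng.uniform(-1.0, 1.0),
        object_y=rng.uniform(-1.0, 1.0),
        texture_id=rng.randrange(1000),  # index into a pool of random textures
    )


def randomized_scenes(n: int, seed: int = 0) -> List[SceneParams]:
    # A seeded generator keeps the randomized dataset reproducible.
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


for scene in randomized_scenes(3):
    print(scene)
```

In a full pipeline, each sampled configuration would be handed to a renderer to produce one labeled training image; that step is simulator-specific and is omitted here.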

Adversarial training, a widely adopted technique in deep learning (DL), focuses on enhancing the robustness and security of ML models [15–18]. It introduces adversarial examples during training, which, while often imperceptible to humans, perturb input data to deliberately induce incorrect predictions from the model. Including adversarial examples in the training data renders the model less susceptible to manipulation and significantly improves its performance in terms of robustness in the presence of noise and adversarial inputs.
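As a rough illustration of this training loop, the sketch below applies the fast gradient sign method (FGSM), one common way to craft adversarial examples, to a small logistic regression model and trains on both clean and perturbed inputs. The toy data, the model, and all function names are assumptions made for illustration; the cited works use deep networks and may rely on different attacks.

```python
import math
import random


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, y):
    p = min(max(predict(w, b, x), 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss.

    For logistic loss, the gradient with respect to x_i is (p - y) * w_i.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def make_data(n=200, seed=0):
    # Two Gaussian blobs as a stand-in for a real dataset (hypothetical).
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randrange(2)
        center = 1.0 if y == 1 else -1.0
        data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], y))
    return data


def train(data, eps=0.0, epochs=50, lr=0.1, seed=0):
    """SGD on clean samples; if eps > 0, also on their FGSM counterparts."""
    rng = random.Random(seed)
    samples = list(data)
    w, b = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wj - lr * err * xj for wj, xj in zip(w, xb)]
                b -= lr * err
    return w, b


data = make_data()
w, b = train(data, eps=0.3)
x, y = data[0]
print("clean loss:", loss(w, b, x, y))
print("adversarial loss:", loss(w, b, fgsm(w, b, x, y, 0.3), y))
```

Setting `eps=0.0` recovers ordinary training, which makes it easy to compare the two models on the same perturbed inputs.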

Adversarial training plays a pivotal role in addressing the Sim2Real transfer problem, where models trained in the controlled environments of simulations are required to perform seamlessly in unpredictable real-world conditions [19–22]. Recent research underlines the critical role of adversarial training in minimizing domain discrepancies and enhancing model adaptability. By diminishing the gap between the realm of simulation and that of reality, this technique offers substantial value across a wide array of applications, ranging from robotics to autonomous systems.

Current Applications of Sim2Real

Sim2Real techniques represent a significant innovation within the ML domain. Their transformative potential has been most prominently realized in robotics and autonomous driving [3], where they have been rigorously tested and refined. In this section, the concrete applications of Sim2Real within these domains are explored, shedding light on their impact and efficacy in addressing real-world challenges.

The core applications of Sim2Real in robotics are examined first, where simulated environments prove to be highly effective for training and optimizing intelligent systems. Sim2Real’s ability to bridge the gap between simulation and reality has empowered robots to interact seamlessly with their surroundings, enabling the navigation of complex terrains [23–26] and object detection, recognition, and manipulation with precision [11, 27–30].

Similarly, in the domain of autonomous driving, Sim2Real techniques have played a pivotal role in enhancing vehicle autonomy and safety. By leveraging simulated environments, autonomous vehicles have undergone extensive training, enabling them to navigate diverse road conditions [31–35] and respond to complex scenarios [35–38].

After the applications of Sim2Real in these well-established domains are covered, the discussion turns to broader horizons, where these techniques have the potential to reshape ML applications across various fields, including the cyber domain.

Applications of Sim2Real in Robotics

Sim2Real techniques have made significant strides in the realm of robotics, reshaping the landscape of intelligent systems’ capabilities and adaptability. These approaches seamlessly bridge the gap between simulated environments and real-world applications, equipping robots with a diverse range of capabilities.

Navigation of Complex Terrains

In the quest to navigate intricate and challenging terrains, simulated environments have become crucial training grounds. Deep reinforcement learning (DRL) plays a pivotal role in these advancements, enabling robots trained in simulation to adapt and excel in real-world scenarios.

As introduced in Hu et al. [23], a novel Sim2Real pipeline empowers mobile robots to navigate three-dimensional (3-D) rough terrains. The pipeline not only facilitates successful point-to-point navigation but also outperforms classical and other DL-based approaches in terms of success rate, cumulative travel distance, and time. Comprehensive surveys, such as the one in Zhao, Queralta, and Westerlund [24], shed light on the challenges of Sim2Real transfer in DRL, categorizing various approaches aimed at closing the gap between simulated and real-world performance, especially in navigation.

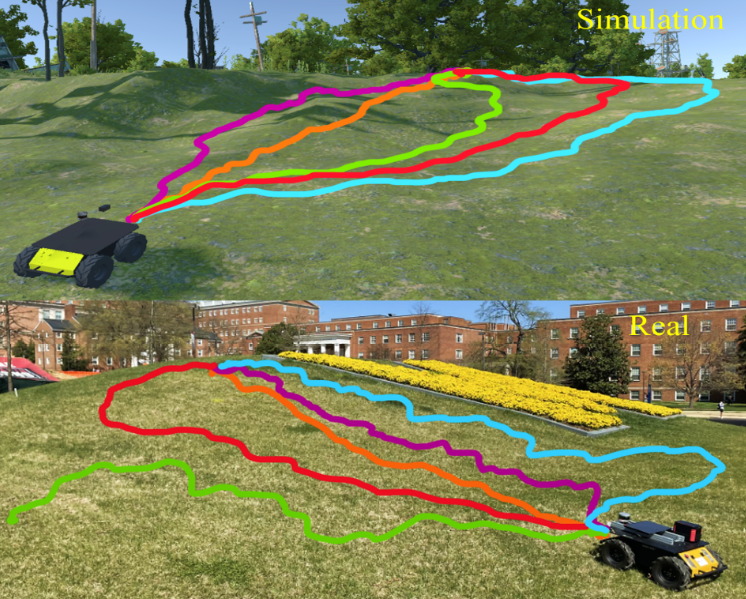

Moreover, Figure 2 demonstrates a hybrid architecture [25] that effectively employs attention-based DRL for navigation cost map generation in outdoor environments. This approach addresses the challenge of obstacle avoidance in complex terrains, as discussed in Zhang et al. [26]. By following least-cost waypoints on the cost map, the robot significantly enhances its performance in uneven outdoor terrains. These examples highlight the versatility of Sim2Real techniques in addressing complex terrains, making robots more adaptable and efficient in navigating challenging landscapes.

Figure 2. Comparison of Navigation Methods on Uneven Outdoor Terrains (Source: Weerakoon et al. [25]).
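The idea of following least-cost waypoints on a cost map can be sketched with a standard shortest-path search. The example below runs Dijkstra's algorithm on a small hand-written grid; it is not the planner used by Weerakoon et al. [25], whose cost maps are produced by an attention-based DRL network, and the grid values and function name are assumptions for illustration.

```python
import heapq


def least_cost_path(cost_map, start, goal):
    """Return (waypoints, total_cost) for the cheapest 4-connected path.

    The cost of a path is the sum of the costs of every cell entered
    after the start cell.
    """
    rows, cols = len(cost_map), len(cost_map[0])
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + cost_map[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best:
        return None, float("inf")
    # Walk the parent links back from the goal to recover the waypoints.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]


# High values mark rough or obstructed cells (hypothetical terrain).
terrain = [
    [1, 1, 1, 1],
    [1, 9, 9, 1],
    [1, 9, 9, 1],
    [1, 1, 1, 1],
]
waypoints, total = least_cost_path(terrain, (0, 0), (3, 3))
print(waypoints, total)
```

On this grid the cheapest route stays on the low-cost border cells and avoids the expensive center, which mirrors how a robot would skirt uneven terrain flagged by the cost map.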

Object Detection, Recognition, and Manipulation

Precision in object detection, recognition, and manipulation is a hallmark of advanced robotics. Researchers in Ho et al. [27] introduced innovative techniques such as RetinaGAN to address the challenge of collecting real-world data for training DRL and imitation learning ML models. This generative adversarial network approach adapts simulated images to resemble real-world scenes with object-detection consistency. The result is a substantial improvement in the performance of reinforcement learning-based tasks like object instance grasping, pushing, and even the more complex task of door opening.

Sim2Real techniques have also been instrumental in object detection, with domain randomization serving as a key method, as highlighted in Horváth et al. [11]. By generating labeled synthetic datasets at scale, Sim2Real transfer learning ensures that state-of-the-art convolutional neural networks, such as YOLOv4, can achieve impressive mean average precision scores in scenarios where labeled real-world data may be scarce. Furthermore, in the industrial sector, Sim2Real techniques enable fast and accurate object recognition and localization for robotic bin picking, as detailed in Li et al. [28]. Supported by automated synthetic data generation pipelines, these methods not only provide precise training data but also excel in scenarios involving textureless, metallic, and occluded objects.

The application of Sim2Real extends to 3-D object detection from point clouds, a domain known for its challenges, as illustrated in DeBortoli et al. [29]. By leveraging adaptive sampling modules and 3-D adversarial training architectures, Sim2Real approaches enhance the consistency of features extracted from point clouds, improving 3-D object detection performance.

Even deformable objects like cloth are not beyond the reach of Sim2Real methods. Deformable object manipulation is a relatively unexplored frontier, with a notable data shortfall. In Matas et al. [30], agents are trained entirely in simulation, using domain randomization to ensure their versatility. They are then successfully deployed in the real world without prior exposure to real deformable objects.

In summary, Sim2Real techniques have evolved into indispensable tools, empowering robots to navigate complex terrains and execute precise object detection, recognition, and manipulation tasks. These advancements have created an era of highly adaptable and capable robotic systems, setting the stage for a new wave of innovations in intelligent robotics.

The next section turns to autonomous driving and proposes that Sim2Real techniques can extend beyond the boundaries of robotics into the broader space of complex real-world challenges.

Applications of Sim2Real for Autonomous Driving

Autonomous driving is a complex field that demands intelligent vehicles capable of navigating diverse road conditions and responding to complex scenarios. Integrating Sim2Real techniques has played a pivotal role in enhancing vehicle autonomy and safety by bridging the gap between simulated training environments and real-world deployment. The following subsections explore how Sim2Real techniques enable autonomous vehicles to excel in challenging conditions.

Navigating Diverse Road Conditions

In the realm of autonomous driving, it is imperative that vehicles can navigate diverse road conditions, from off-road terrains to urban environments. Sim2Real techniques offer innovative solutions to train and deploy autonomous vehicles effectively.

A human-guided RL framework is introduced in Wu et al. [31], enhancing the learning process and capabilities of RL methods. This approach allows humans to intervene in the control progress, providing demonstrations as needed. The result is a versatile RL agent trained in simulation and effectively transferred to real-world unmanned ground vehicles, demonstrating robust navigation in dynamic and diverse environments.

To further bridge the visual reality gap for off-road autonomous driving, So et al. [32] introduce Sim2Seg, a novel approach that translates randomized simulation images into simulated segmentation and depth maps, enabling the direct deployment of an end-to-end RL policy in real-world scenarios. Sim2Seg effectively narrows the gap between the simulated training environment and real-world driving, particularly in off-road conditions.

An approach that combines the advantages of modular architectures and end-to-end DL for autonomous driving is presented by Müller et al. [33]. By encapsulating the driving policy, it successfully transfers policies trained in simulation to real-world deployments, addressing the challenges of adapting to diverse road conditions.

Mapless navigation is a crucial aspect of autonomous driving, and Wang et al. [34] propose a DRL-based approach for unmanned surface vehicles. This method carefully designs the observation and action spaces, reward functions, and neural networks for navigation policies. By employing domain randomization and adaptive curriculum learning, it offers an effective solution to the Sim2Real transfer challenge and the slow convergence associated with DRL.

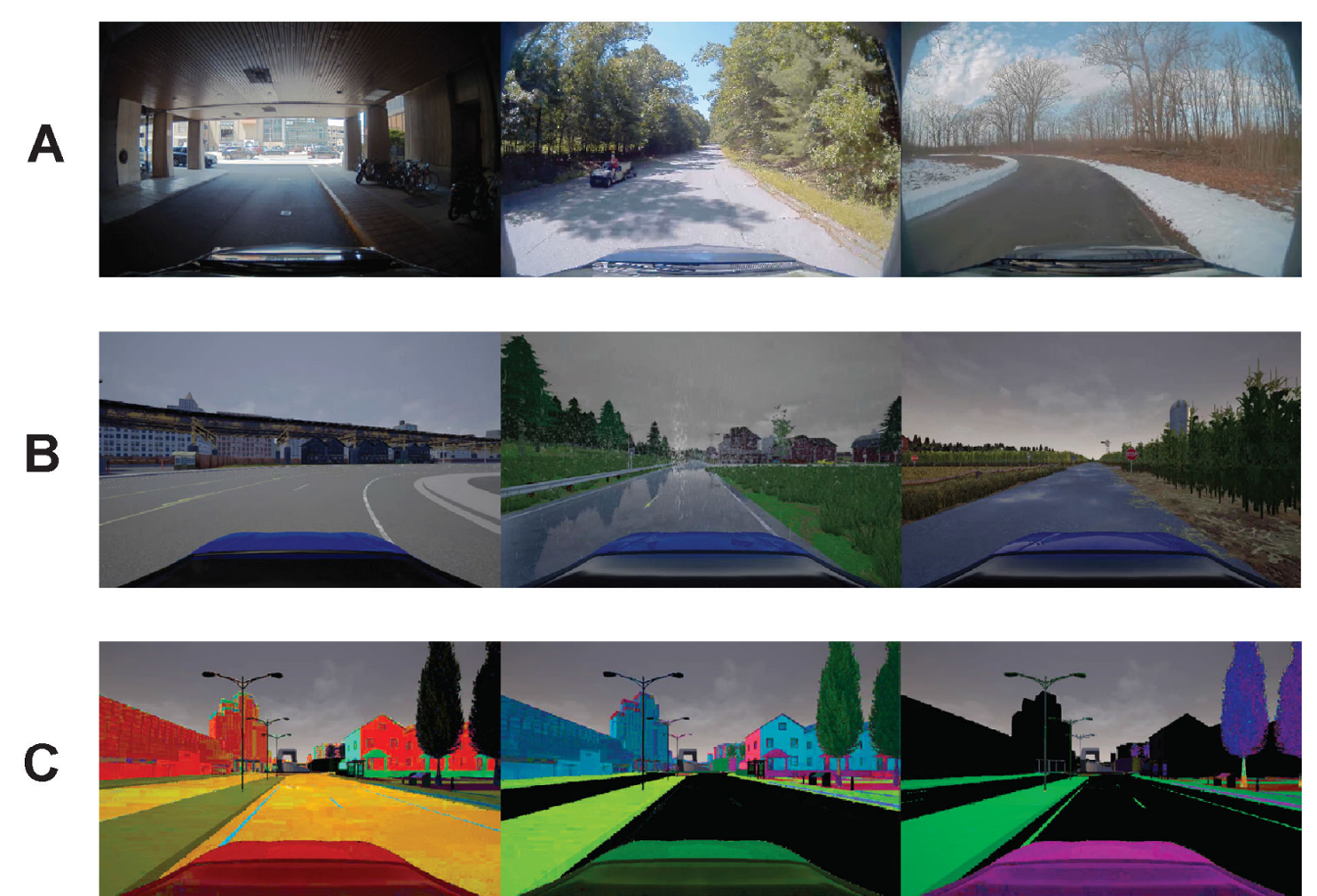

A data-driven simulation and training engine, which allows autonomous vehicles to learn end-to-end control policies through sparse rewards, is discussed in Amini et al. [35]. As illustrated in Figure 3, training images from several comparison methods used in experimentation highlight the diversity of environments and conditions encountered during training. This simulation enables vehicles to navigate a continuum of new local trajectories in diverse road conditions, demonstrating the feasibility of transferring policies from simulation to real-world deployment. The same engine also bears on Sim2Real’s ability to support responses to complex scenarios, which is discussed in the next subsection.

Figure 3. Training Images From (A) Real-World Samples and (B–C) Simulated Environments (Source: Amini et al. [35]).

Responding to Complex Scenarios

Autonomous vehicles must not only navigate diverse road conditions but also respond effectively to complex scenarios, including near-crash situations and challenging traffic interactions. The following examples show how Sim2Real techniques equip these vehicles to tackle intricate real-world challenges.

In the context of responding to complex scenarios, Amini et al. [35] present a data-driven simulation and training engine that learns end-to-end autonomous vehicle control policies using sparse rewards. By rendering novel training data derived from real-world trajectories, the simulator allows virtual agents to navigate previously unseen real-world roads, even in near-crash scenarios. This approach demonstrates the potential of Sim2Real techniques to create policies that can handle complex and novel situations.

A method that transfers a vision-based lane following driving policy from simulation to real-world operation on rural roads without any real-world labels is introduced in Bewley et al. [36]. Leveraging image-to-image translation, a single-camera control policy is learned while achieving domain transfer. This approach successfully operates autonomous vehicles in rural and urban environments, illustrating the applicability of Sim2Real for complex real-world scenarios.

Unsupervised domain adaptation methods have been developed for lane detection and classification in autonomous driving, as described in Hu et al. [37]. By using synthetic data generated in simulation, these methods leverage adversarial discriminative and generative techniques to adapt to the real world. They demonstrate superiority in detection and classification accuracy and consistency in complex traffic scenarios.

Complex multivehicle and multilane scenarios are particularly challenging for autonomous vehicles. A Sim2Real approach to safely learn driving policies for autonomous vehicles sharing the road with other vehicles and obstacles is discussed in Mitchell et al. [38]. This approach leverages mixed reality setups to simulate collisions and interactions, making the learning process safer. After only a few runs in mixed reality, collisions are significantly reduced, indicating the approach’s effectiveness in addressing complex traffic scenarios.

Sim2Real’s influence within the autonomous driving domain has transcended conventional boundaries, echoing its success in robotics. These techniques have introduced advancements in self-driving technology, enhancing not only the capabilities but also the safety of autonomous vehicles.

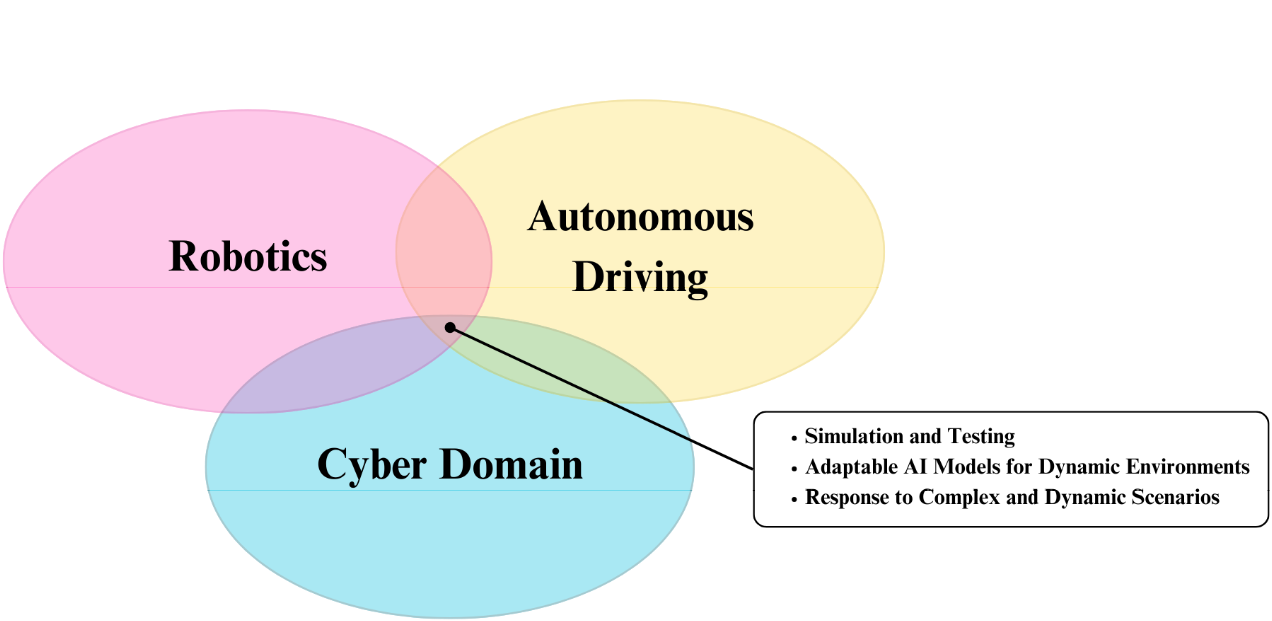

As the potential applications of Sim2Real within the cyber domain are explored next, it becomes clear that the transformation driven by Sim2Real is far from over. Figure 4 portrays the intricate overlap between these existing and potential applications, offering a nuanced perspective on the transformative potential that Sim2Real introduces across diverse domains. Just as it has reshaped the realms of robotics and autonomous driving, Sim2Real now holds the promise of innovative solutions and novel perspectives to address the multifaceted complexities of the cyber domain.

Figure 4. Visualizing the Overlap Between Existing and Potential Applications of Sim2Real (Source: E. Nack).

Potential Applications of Sim2Real in the Cyber Domain

In the cyber domain, advancement is not merely a desire but a necessity. However, when it comes to applying Sim2Real techniques, a noticeable void remains. Unlike its well-established presence in robotics and autonomous driving, Sim2Real remains largely unexplored within the cyber domain. This limitation can be attributed to the nascent application of ML to cybersecurity and the constrained availability of advanced cyber simulators and emulators.

This absence prompts a pivotal question: What can the cyber domain gain from Sim2Real techniques? The answer lies in the intrinsic nature of cybersecurity—a realm where the stakes are high and the consequences of failure can be catastrophic. In an era where cyber threats perpetually evolve, organizations require dynamic, adaptable, and data-rich environments for training, testing, and fortifying their defenses. Sim2Real holds the potential to bridge this gap.

The opportunities that this fusion of simulation and reality can unlock, from reshaping cybersecurity training and testing to facilitating meticulous vulnerability assessments, are discussed next. Sim2Real offers an opportunity to redefine the landscape of the cyber domain in a way that is not only innovative but indispensable.

Cybersecurity Training and Testing

Various approaches have been employed in cybersecurity training and testing to equip professionals with the necessary skills and experience. Traditional classroom-style training provides theoretical instruction to individuals entering cybersecurity [39, 40]. However, this approach often falls short in replicating the real-world dynamics and pressures associated with cyber threats.

To enhance engagement and provide a more immersive experience, the industry has turned to gamification. Several training platforms incorporate gamified scenarios, allowing participants to navigate simulated cyber threats interactively [41, 42]. “Capture The Flag” competitions are a compelling example of gamification within cybersecurity, providing a platform for participants to solve security-related puzzles and challenges [43]. Although widely recognized for its positive impact on user engagement and skill development, gamification often focuses on specific elements of cybersecurity, providing expertise in targeted areas but not offering a comprehensive training method for the diverse and rapidly evolving cyber threat landscape.

These current practices, while contributing significantly to cybersecurity training and testing, face inherent challenges. The limitations of predefined scenarios, the static nature of exercises, and the finite set of challenges in gamified platforms all point to the need for dynamic and adaptable training environments capable of replicating the intricacies of real-world cyber threats.

Recognizing the limitations in current practices, the integration of Sim2Real techniques becomes a natural progression. While existing simulations provide valuable training and testing environments [39, 44, 45], the critical aspect of seamlessly transitioning from simulation to reality remains underexplored. The literature on Sim2Real techniques in cybersecurity is limited, particularly concerning the effectiveness of applying knowledge gained in simulated environments to real-world cybersecurity scenarios.

In this context, Cyber Virtual Assured Network (CyberVAN) and Cyber Battlefield Operating System Simulation (CyberBOSS) emerge as valuable simulation environments that address the existing gaps in cybersecurity training and testing. CyberVAN functions as a discrete event simulator, offering a quick and flexible setup of high-fidelity cybersecurity scenarios [46]. This simulation environment provides a dynamic and realistic platform for training and testing, enabling the instantiation of custom high-fidelity networks. Its capability aligns seamlessly with the customization potential of Sim2Real environments to mirror specific network architectures, industry sectors, or regulatory compliance requirements, enhancing the overall adaptability of the training environment. Furthermore, as Sim2Real techniques aim to open doors to dynamic and realistic cyber environments, CyberVAN contributes by replicating actual cyber threats and scenarios in controlled settings. Serving as crucial training grounds, these simulations empower cybersecurity professionals to refine their detection, mitigation, and response strategies effectively.

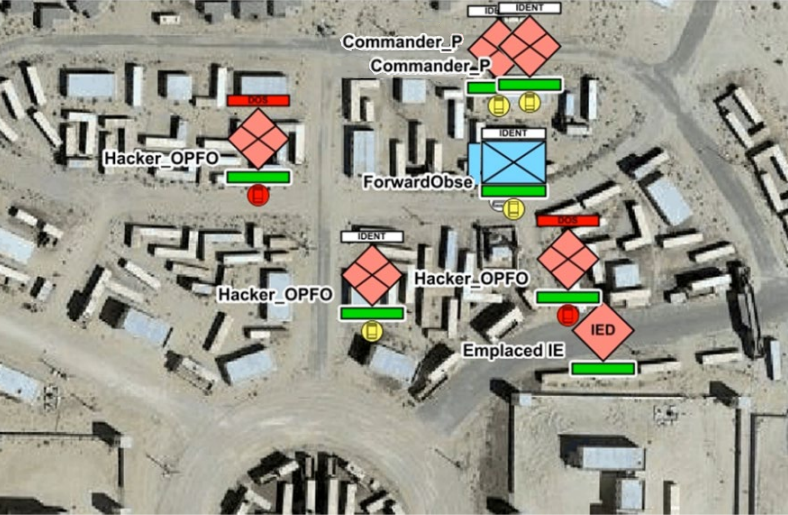

In addition to CyberVAN, CyberBOSS introduces a framework designed to model cyberspace effects and operations [47]. This framework operates across federated live, virtual, constructive, and gaming (LVC&G) systems, offering a comprehensive solution for cyberspace modeling in environments that may lack these capabilities. CyberBOSS stands as a strategic asset, particularly in scenarios where native cyberspace modeling is limited or nonexistent. Figure 5 visualizes an example of cyberspace-related objects and effects within the STTC’s Battlespace Visualization and Interaction tool using information provided by the CyberBOSS federation. Integrating these advanced simulation tools, combined with Sim2Real techniques, enhances the potential of bridging the gap between simulation training scenarios and the complex realities of cybersecurity, ensuring that professionals remain agile and well-prepared against emerging threats.

Figure 5. Visualizing Cyberspace-Related Objects and Effects Using CyberBOSS (Source: Hasan et al. [47]).

In conclusion, the current research gap in Sim2Real within cybersecurity necessitates further exploration and investigation into the seamless transition from simulated cybersecurity environments to real-world applications; however, integrating advanced simulation tools like CyberVAN and CyberBOSS stands as a promising avenue for addressing this gap and revolutionizing cybersecurity training and testing.

Vulnerability Testing

Vulnerability testing stands as a cornerstone of effective cybersecurity, playing a pivotal role in the timely identification and mitigation of potential threats to an organization’s digital assets. While established methods like vulnerability assessment and penetration testing have made significant contributions to this process, inherent limitations prompt a reevaluation of these existing approaches.

Vulnerability assessment stands as a passive and proactive strategy, employing tools like Nessus [48] and OpenVAS [49] to systematically uncover known vulnerabilities in network configurations and software systems. A comparative study of these tools highlights their features and effectiveness in vulnerability assessment [50]. However, as automation proliferates, organizations grapple with copious data, including false positives. Challenges also extend to identifying logical attack vectors, such as application logic flaws and password reuse, often resulting in generic remediation recommendations. The rigidity of automated tools may also lead them to overlook emerging threats or vulnerabilities not present in their databases [51]. This limitation hampers their effectiveness in adapting to dynamically evolving threat landscapes.

On the other hand, penetration testing embraces an active and systematic approach to security assessment. Ethical hacking simulates real-world cyber attacks, providing profound insights into the impact of identified vulnerabilities on the information system [52]. This exploration considers mitigating controls and allows for a comprehensive evaluation of the security landscape, eliminating false positives. Yet, penetration testing demands considerable time and effort, potentially requiring external engagement for comprehensive testing. Its outcomes may not guarantee identifying every vulnerability, and it might not provide insights into emerging vulnerabilities post assessment [51].

Confronting these challenges embedded in traditional vulnerability assessment and penetration testing, Sim2Real techniques emerge as a transformative solution. By allowing testing and assessment to take place off the network, Sim2Real provides a controlled and secure environment for organizations to simulate various cyber attacks and exploitation techniques, gaining insights before deployment into the live network. This proactive approach empowers organizations to identify and address vulnerabilities before they can be exploited by malicious actors.

Furthermore, Sim2Real assessments extend the scope of vulnerability testing beyond traditional methods, accommodating complex infrastructures, including cloud-based systems, Internet of Things (IoT) devices, and critical infrastructure networks—all within a secure and controlled context.

In essence, Sim2Real techniques offer a unique set of advantages, enabling organizations to map and analyze more complex scenarios before deployment and ultimately enhancing their cybersecurity posture.

Training AI for Cybersecurity

Artificial intelligence (AI) is rapidly evolving in modern cybersecurity, empowering threat detection, anomaly detection, predictive analysis, and more. However, training AI models for cybersecurity applications demands large amounts of diverse and high-quality data [53]. Currently, researchers rely on popular datasets such as ACI-IoT-2023 [54], KDD Cup ’99 [55], UNSW-NB15 [56], CIC-IDS2017 [57], CIC-DDoS2019 [58], CERT [59, 60], and Bot-IoT [61]. These datasets encompass a range of cyber threats, providing a foundation for training AI models to recognize and respond to various attack vectors, vulnerabilities, and malicious activities.

However, building comprehensive datasets for AI training in cybersecurity poses significant challenges. Acquiring diverse and realistic data often requires a well-equipped cybersecurity lab setup, a multitude of devices, and precise data collection methods. Constructing such datasets is not only resource-intensive but can also be challenging due to the dynamic nature of cyber threats. The complexities involved in replicating real-world cyber scenarios highlight the limitations of current approaches in providing sufficiently diverse and adaptive datasets for effective AI model training.

Sim2Real techniques provide a compelling and more effective solution for training AI models by creating data-rich environments that accurately mimic the complexities of actual cyber landscapes. This novel approach addresses the limitations of existing training practices, offering a dynamic and realistic environment for AI models to learn and adapt to the ever-evolving landscape of cyber threats.

Within Sim2Real-based cyber environments, AI models can be exposed to a vast array of realistic cyber threats and scenarios. These environments generate diverse and dynamic datasets that encompass various attack vectors, malware samples, and network traffic patterns. AI models can learn from these simulated encounters, improving their ability to detect and respond to real-world cyber threats effectively.

Moreover, Sim2Real allows for the injection of controlled anomalies and variations into the data, enabling AI models to develop robust anomaly detection capabilities. AI models trained in these environments become highly adaptable, as they are exposed to a broad spectrum of cyber scenarios from routine network traffic to sophisticated zero-day attacks.
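A minimal sketch of controlled anomaly injection is shown below. It generates a synthetic traffic-rate series, injects labeled bursts at a chosen rate, and flags outliers with a robust z-score based on the median and the median absolute deviation (MAD). The baseline rate, burst sizes, threshold, and function names are all assumptions for illustration and do not describe any particular cyber simulator.

```python
import random
import statistics
from typing import List, Tuple


def simulate_traffic(n: int, anomaly_rate: float,
                     seed: int = 0) -> Tuple[List[float], List[int]]:
    """Return (requests_per_second, labels) with injected, labeled bursts."""
    rng = random.Random(seed)
    values, labels = [], []
    for _ in range(n):
        rate = rng.gauss(100.0, 10.0)  # assumed normal traffic level
        is_anomaly = rng.random() < anomaly_rate
        if is_anomaly:
            rate += rng.uniform(80.0, 200.0)  # injected burst of known size
        values.append(rate)
        labels.append(int(is_anomaly))
    return values, labels


def robust_flags(values: List[float], threshold: float = 3.5) -> List[int]:
    """Flag points whose robust z-score exceeds the threshold."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # Degenerate case: most values are identical, so any deviation stands out.
        return [int(v != med) for v in values]
    return [int(0.6745 * abs(v - med) / mad > threshold) for v in values]


values, labels = simulate_traffic(500, anomaly_rate=0.02)
flags = robust_flags(values)
caught = sum(1 for f, l in zip(flags, labels) if f and l)
print("injected:", sum(labels), "caught:", caught)
```

Because the simulation controls where and how anomalies are injected, every sample comes with a ground-truth label, which is precisely what is difficult to obtain from live network traffic.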

The implications of training AI for cybersecurity within Sim2Real environments extend to predictive analysis and threat intelligence. AI models can learn to recognize patterns indicative of emerging threats, enhancing an organization’s ability to proactively respond to evolving cyber risks.

Challenges and Limitations

Despite the promising potential of Sim2Real techniques in the cyber domain, their implementation faces certain challenges and limitations that should be considered.

Simulations, while valuable, often encounter a significant hurdle known as the “Simulation to Reality Gap” [62, 63]. Simulations can replicate real-world scenarios to a certain extent, but they may not fully capture the complexity and unpredictability of real-world cyber environments. This inherent discrepancy could limit the effectiveness of Sim2Real techniques in preparing for and responding to real-world cyber threats.

Data privacy and security are significant concerns in any application that involves extensive data [64]. While simulations often demand extensive data for efficacy, incorporating real-world data into simulations introduces potential privacy risks, especially within the cyber domain where sensitive information is often involved. Striking a delicate balance between ensuring data privacy and maintaining simulation effectiveness becomes crucial in this context.

Furthermore, the quantity and quality of data are critical considerations in Sim2Real techniques. Insufficient or inaccurate data can impede the fidelity of simulations, hindering their effectiveness in modeling real-world scenarios. Recognizing and addressing these challenges is essential in enhancing the accuracy and reliability of these techniques.

A fundamental challenge lies in validating Sim2Real techniques within the cyber domain. Because Sim2Real remains relatively unexplored in this domain, there are no established metrics or benchmarks for evaluating the success of these techniques. For instance, without standardized measures, it becomes challenging to assess how well a model trained in a simulated environment would perform when facing real-world cyber threats.
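One simple candidate measure, offered here only as an assumption and not as an established benchmark, is the drop in a standard classification score when a model trained in simulation is evaluated on real data. The Python sketch below computes that drop using the F1 score; the threshold detector and the toy data are hypothetical.

```python
def f1_score(y_true, y_pred, positive="attack"):
    """F1 score for the positive class, computed from raw counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def sim2real_gap(model, sim_test, real_test):
    """Return (F1 on simulated data, F1 on real data, absolute drop)."""
    sim_x, sim_y = sim_test
    real_x, real_y = real_test
    f1_sim = f1_score(sim_y, [model(x) for x in sim_x])
    f1_real = f1_score(real_y, [model(x) for x in real_x])
    return f1_sim, f1_real, f1_sim - f1_real


def threshold_detector(bytes_sent):
    """Hypothetical detector 'trained' in simulation: flag large transfers."""
    return "attack" if bytes_sent > 10_000 else "benign"


# Toy data: byte counts per flow and their true labels.
sim_test = ([500, 800, 50_000, 70_000], ["benign", "benign", "attack", "attack"])
real_test = ([600, 12_000, 9_000, 60_000], ["benign", "benign", "attack", "attack"])

f1_sim, f1_real, gap = sim2real_gap(threshold_detector, sim_test, real_test)
```

In this toy case the detector separates the simulated flows perfectly but, on the real flows, misses a low-volume attack and flags a large benign transfer, so the F1 score falls from 1.0 to 0.5. A single number like this does not explain why the gap arises, but it gives a repeatable way to compare simulation configurations.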

Despite these challenges, recognizing the potential benefits of Sim2Real techniques in the cyber domain is crucial. Continued research and development in this field hold the promise of revolutionizing cybersecurity training and testing, offering a dynamic and adaptable solution to the ever-evolving landscape of cyber threats. However, it is essential to approach this potential with a clear understanding of the challenges and limitations, ensuring realistic expectations and effective strategies for overcoming these obstacles.

Conclusions

The convergence of Sim2Real techniques with the cyber domain represents a promising frontier in cybersecurity and ML. This article delved into the foundational concepts of Sim2Real, explored its current applications in robotics and autonomous driving, and examined its potential applications, challenges, and limitations within the cyber domain.

While Sim2Real techniques have already demonstrated their value in training robots and autonomous vehicles, their application within the cyber domain holds the potential to revolutionize how organizations prepare for, defend against, and respond to cyber threats. Creating dynamic and data-rich cyber environments for training, testing, vulnerability assessment, and AI model training offers a new paradigm for enhancing cybersecurity resilience.

Despite the immense promise, integrating Sim2Real techniques into the cyber domain is not without its challenges. However, these challenges present compelling research opportunities for the future of Sim2Real in the cyber domain. Exploring the seamless integration of Sim2Real techniques with real-world cybersecurity scenarios, optimizing adaptability in the face of evolving threats, establishing standardized metrics for assessment, and refining the transferability of simulated knowledge to practical applications are just a few examples of the exciting avenues for future exploration.

In the future, Sim2Real gaps will be evaluated by training an ML-based Network Intrusion Detection System classifier in CyberVAN. Subsequently, the objective is to transfer this classifier to the IoT Research Lab at the Army Cyber Institute to assess its effectiveness and explore ways to improve performance. Such experimentation will contribute significantly to understanding the challenges and potentials of Sim2Real techniques in real-world cyber applications.

By embracing these techniques and addressing their challenges, the cyber domain can become more adaptive, resilient, and prepared to safeguard the digital ecosystems that underpin modern society. Continued research and development will be key to unlocking the full potential of Sim2Real in enhancing cyber resilience and redefining the landscape of cybersecurity practices.

References

- Hu, X., et al. “How Simulation Helps Autonomous Driving: A Survey of Sim2real, Digital Twins, and Parallel Intelligence.” IEEE Transactions on Intelligent Vehicles, 2023.

- Höfer, S., et al. “Perspectives on Sim2real Transfer for Robotics: A Summary of the R:SS 2020 Workshop.” arXiv preprint arXiv:2012.03806, 2020.

- Höfer, S., et al. “Sim2Real in Robotics and Automation: Applications and Challenges.” IEEE Transactions on Automation Science and Engineering, vol. 18.2, pp. 398–400, 2021.

- Salvato, E., et al. “Crossing the Reality Gap: A Survey on Sim-To-Real Transferability of Robot Controllers in Reinforcement Learning.” IEEE Access, vol. 9, pp. 153171–153187, 2021.

- Chebotar, Y., et al. “Closing the Sim-to-Real Loop: Adapting Simulation Randomization With Real World Experience.” The 2019 International Conference on Robotics and Automation (ICRA), IEEE, 2019.

- Tan, J., et al. “Sim-to-Real: Learning Agile Locomotion for Quadruped Robots.” arXiv preprint arXiv:1804.10332, 2018.

- James, S., et al. “Sim-to-Real via Sim-to-Sim: Data-Efficient Robotic Grasping via Randomized-to-Canonical Adaptation Networks.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.

- Chen, X., et al. “Understanding Domain Randomization for Sim-to-Real Transfer.” arXiv preprint arXiv:2110.03239, 2021.

- Muratore, F., et al. “Robot Learning From Randomized Simulations: A Review.” Frontiers in Robotics and AI, vol. 31, 2022.

- Tobin, J., et al. “Domain Randomization for Transferring Deep Neural Networks From Simulation to the Real World.” The 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, 2017.

- Horváth, D., et al. “Object Detection Using Sim2real Domain Randomization for Robotic Applications.” IEEE Transactions on Robotics, vol. 39.2, pp. 1225–1243, 2022.

- Marez, D., S. Borden, and L. Nans. “UAV Detection With a Dataset Augmented by Domain Randomization.” Geospatial Informatics X, vol. 11398, SPIE, 2020.

- Ranaweera, M., and Q. Mahmoud. “Evaluation of Techniques for Sim2Real Reinforcement Learning.” The International FLAIRS Conference Proceedings, vol. 36, 2023.

- Akkaya, I., et al. “Solving Rubik’s Cube With a Robot Hand.” arXiv preprint arXiv:1910.07113, 2019.

- Kurakin, A., I. Goodfellow, and S. Bengio. “Adversarial Machine Learning at Scale.” The 5th International Conference on Learning Representations, ICLR 2017 – Conference Track Proceedings, arXiv preprint arXiv:1611.01236, November 2016.

- Huang, J., H. J. Choi, and N. Figueroa. “Trade-Off Between Robustness and Rewards Adversarial Training for Deep Reinforcement Learning Under Large Perturbations.” IEEE Robotics and Automation Letters, 2023.

- Shrivastava, A., et al. “Learning From Simulated and Unsupervised Images Through Adversarial Training.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017.

- Tramèr, F., A. Kurakin, N. Papernot, I. Goodfellow, D. Boneh, and P. McDaniel. “Ensemble Adversarial Training: Attacks and Defenses.” In the International Conference on Learning Representations (ICLR), arXiv preprint arXiv:1705.07204, https://arxiv.org/abs/1705.07204, 2017.

- Rezaeianaran, F., et al. “Seeking Similarities over Differences: Similarity-Based Domain Alignment for Adaptive Object Detection.” Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021.

- Zhai, P., et al. “Robust Adaptive Ensemble Adversary Reinforcement Learning.” IEEE Robotics and Automation Letters, vol. 7.4, pp. 12562–12568, 2022.

- Shin, I., K. Park, S. Woo, and I. S. Kweon. “Unsupervised Domain Adaptation for Video Semantic Segmentation.” In the AAAI Conference on Artificial Intelligence, arXiv preprint arXiv:2107.11052, 2022.

- Cheng, K., C. Healey, and T. Wu. “Towards Adversarially Robust and Domain Generalizable Stereo Matching by Rethinking DNN Feature Backbones.” arXiv preprint arXiv:2108.00335, 2021.

- Hu, H., et al. “A Sim-to-Real Pipeline for Deep Reinforcement Learning for Autonomous Robot Navigation in Cluttered Rough Terrain.” IEEE Robotics and Automation Letters, vol. 6.4, pp. 6569–6576, 2021.

- Zhao, W., J. P. Queralta, and T. Westerlund. “Sim-to-Real Transfer in Deep Reinforcement Learning for Robotics: A Survey.” 2020 IEEE Symposium Series on Computational Intelligence (SSCI), IEEE, 2020.

- Weerakoon, K., A. J. Sathyamoorthy, and D. Manocha. “Sim-to-Real Strategy for Spatially Aware Robot Navigation in Uneven Outdoor Environments.” In the ICRA 2022 Workshop on Releasing Robots Into the Wild, arXiv preprint arXiv:2205.09194, 2022.

- Zhang, T., et al. “Sim2real Learning of Obstacle Avoidance for Robotic Manipulators in Uncertain Environments.” IEEE Robotics and Automation Letters, vol. 7.1, pp. 65–72, 2021.

- Ho, D., et al. “RetinaGAN: An Object-Aware Approach to Sim-to-Real Transfer.” The 2021 IEEE International Conference on Robotics and Automation (ICRA), IEEE, 2021.

- Li, X., et al. “A Sim-to-Real Object Recognition and Localization Framework for Industrial Robotic Bin Picking.” IEEE Robotics and Automation Letters, vol. 7.2, pp. 3961–3968, 2022.

- DeBortoli, R., et al. “Adversarial Training on Point Clouds for Sim-to-Real 3D Object Detection.” IEEE Robotics and Automation Letters, vol. 6.4, pp. 6662–6669, 2021.

- Matas, J., S. James, and A. J. Davison. “Sim-to-Real Reinforcement Learning for Deformable Object Manipulation.” Conference on Robot Learning, PMLR, 2018.

- Wu, J., et al. “Human-Guided Reinforcement Learning With Sim-to-Real Transfer for Autonomous Navigation.” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- So, J., A. Xie, S. Jung, J. Edlund, R. Thakker, A. Agha-Mohammadi, P. Abbeel, and S. James. “Sim-to-Real via Sim-to-Seg: End-to-End Off-Road Autonomous Driving Without Real Data.” Proceedings of the 6th Conference on Robot Learning, PMLR 205, pp. 1871–1881, arXiv preprint arXiv:2210.14721, 2022.

- Müller, M., A. Dosovitskiy, B. Ghanem, and V. Koltun. “Driving Policy Transfer via Modularity and Abstraction.” Proceedings of the 2nd Conference on Robot Learning in Proceedings of Machine Learning Research, vol. 87, pp. 1–15, https://proceedings.mlr.press/v87/mueller18a.html, arXiv preprint arXiv:1804.09364, 2018.

- Wang, N., et al. “Sim-to-Real: Mapless Navigation for USVs Using Deep Reinforcement Learning.” Journal of Marine Science and Engineering, vol. 10.7, p. 895, 2022.

- Amini, A., et al. “Learning Robust Control Policies for End-to-End Autonomous Driving From Data-Driven Simulation.” IEEE Robotics and Automation Letters, vol. 5.2, pp. 1143–1150, 2020.

- Bewley, A., et al. “Learning to Drive From Simulation Without Real World Labels.” The 2019 International Conference on Robotics and Automation (ICRA), IEEE, 2019.

- Hu, C., et al. “Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving.” The 2022 IEEE Intelligent Vehicles Symposium (IV), 2022.

- Mitchell, R., J. Fletcher, J. Panerati, and A. Prorok. “Multi-Vehicle Mixed Reality Reinforcement Learning for Autonomous Multi-Lane Driving.” In the Proceedings of the 19th International Conference on Autonomous Agents and Multiagent Systems, Auckland, New Zealand, pp. 1928–1930, arXiv preprint arXiv:1911.11699, 2020.

- Urias, V. E., et al. “Dynamic Cybersecurity Training Environments for an Evolving Cyber Workforce.” The 2017 IEEE International Symposium on Technologies for Homeland Security (HST), IEEE, 2017.

- Hatzivasilis, G., et al. “Modern Aspects of Cyber-Security Training and Continuous Adaptation of Programmes to Trainees.” Applied Sciences, vol. 10.16, p. 5702, 2020.

- Jin, G., et al. “Evaluation of Game-Based Learning in Cybersecurity Education for High School Students.” Journal of Education and Learning (EduLearn), vol. 12.1, pp. 150–158, 2018.

- Jin, G., et al. “Game Based Cybersecurity Training for High School Students.” Proceedings of the 49th ACM Technical Symposium on Computer Science Education, 2018.

- Švábenský, V., et al. “Enhancing Cybersecurity Skills by Creating Serious Games.” Proceedings of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education, 2018.

- Chowdhury, N., and V. Gkioulos. “Cyber Security Training for Critical Infrastructure Protection: A Literature Review.” Computer Science Review, vol. 40, p. 100361, 2021.

- Prümmer, J., T. van Steen, and B. van den Berg. “A Systematic Review of Current Cybersecurity Training Methods.” Computers & Security, p. 103585, 2023.

- Chadha, R., et al. “CyberVAN: A Cyber Security Virtual Assured Network Testbed.” MILCOM 2016-IEEE Military Communications Conference, 2016.

- Hasan, O., J. Geddes, J. Welch, N. Vey, and R. Burch. “A Cyberspace Effects Server for LVC&G Training Systems.” I/ITSEC 2021 Conference (paper no. 21258), Orlando, FL, 2021.

- Tenable. “Nessus Vulnerability Scanner: Network Security Solution.” www.tenable.com/products/nessus, accessed 12 December 2023.

- OpenVAS – Open Vulnerability Assessment Scanner. www.openvas.org, accessed 12 December 2023.

- Yadav, S. K., D. S. Pandey, and S. Lade. “A Comparative Analysis of Detecting Vulnerability in Network Systems.” International Journal of Advanced Research in Computer Science and Software Engineering, vol. 7.5, 2017.

- Shinde, P. S., and S. B. Ardhapurkar. “Cyber Security Analysis Using Vulnerability Assessment and Penetration Testing.” The 2016 World Conference on Futuristic Trends in Research and Innovation for Social Welfare (Startup Conclave), IEEE, 2016.

- Yaqoob, I., et al. “Penetration Testing and Vulnerability Assessment.” Journal of Network Communications and Emerging Technologies (JNCET), vol. 7.8, www.jncet.org, 2017.

- Sarker, I. H., et al. “Cybersecurity Data Science: An Overview from Machine Learning Perspective.” Journal of Big Data, vol. 7, pp. 1–29, 2020.

- Bastian, N., D. Bierbrauer, M. McKenzie, and E. Nack. “ACI IoT Network Traffic Dataset 2023.” IEEE Dataport, https://dx.doi.org/10.21227/qacj-3x32, 29 December 2023.

- Tavallaee, M., et al. “A Detailed Analysis of the KDD CUP 99 Data Set.” The 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, 2009.

- Moustafa, N., and J. Slay. “UNSW-NB15: A Comprehensive Data Set for Network Intrusion Detection Systems (UNSW-NB15 Network Data Set).” The 2015 Military Communications and Information Systems Conference (MilCIS), IEEE, 2015.

- Sharafaldin, I., A. H. Lashkari, and A. A. Ghorbani. “Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization.” ICISSP 1, pp. 108–116, 2018.

- Sharafaldin, I., et al. “Developing Realistic Distributed Denial of Service (DDoS) Attack Dataset and Taxonomy.” The 2019 International Carnahan Conference on Security Technology (ICCST), IEEE, 2019.

- Lindauer, B., et al. “Generating Test Data for Insider Threat Detectors.” J. Wirel. Mob. Networks Ubiquitous Comput. Dependable Appl., vol. 5.2, pp. 80–94, 2014.

- Glasser, J., and B. Lindauer. “Bridging the Gap: A Pragmatic Approach to Generating Insider Threat Data.” The 2013 IEEE Security and Privacy Workshops, 2013.

- Koroniotis, N., et al. “Towards the Development of Realistic Botnet Dataset in the Internet of Things for Network Forensic Analytics: Bot-IoT Dataset.” Future Generation Computer Systems, vol. 100, pp. 779–796, 2019.

- Jakobi, N., P. Husbands, and I. Harvey. “Noise and the Reality Gap: The Use of Simulation in Evolutionary Robotics.” Advances in Artificial Life: Third European Conference on Artificial Life Granada, Spain, 4–6 June 1995.

- Koos, S., J.-B. Mouret, and S. Doncieux. “The Transferability Approach: Crossing the Reality Gap in Evolutionary Robotics.” IEEE Transactions on Evolutionary Computation, vol. 17.1, pp. 122–145, 2012.

- Tene, O., and J. Polonetsky. “Privacy in the Age of Big Data: A Time for Big Decisions.” Stan. L. Rev. Online, vol. 64, p. 63, 2011.

Biographies

Emily Nack is an information technology specialist and research lab manager at the U.S. Army Cyber Institute within the United States Military Academy (USMA) at West Point. Her research focuses on cyber modeling and simulation (M&S), augmented reality for tactical and operational data visualization, and artificial intelligence integration in M&S environments. Ms. Nack holds a B.S. degree in game design and development from the Rochester Institute of Technology and an A.S. degree in computer information systems from Hudson Valley Community College.

Nathaniel D. Bastian is a Lieutenant Colonel in the U.S. Army, where he is an academy professor and cyber warfare officer at Army Cyber Institute within the USMA at West Point and a program manager in the Information Innovation Office at the Defense Advanced Research Projects Agency. His research efforts aim to develop innovative, assured, intelligent, human-aware, data-centric, and decision-driven capabilities for multidomain operations. Dr. Bastian holds a B.S. in engineering management (electrical engineering) from USMA, an M.S. in econometrics and operations research from Maastricht University, and an M.Eng. in industrial engineering and Ph.D. in industrial engineering and operations research from Pennsylvania State University.