Introduction

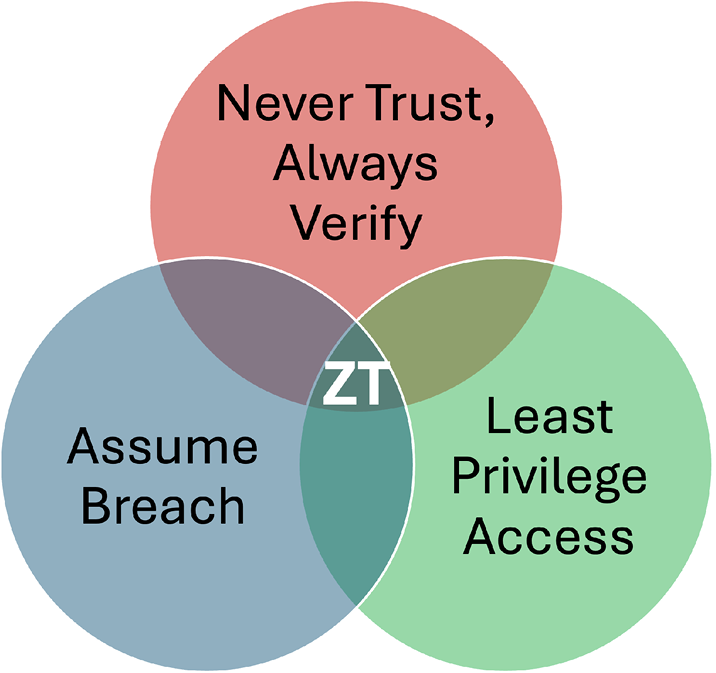

Zero Trust Architecture (ZTA) has become a mainstream information security philosophy. Many commercial enterprises are in varying stages of their journeys in adopting and implementing ZTA. Similarly, federal policy has moved toward ZTA, motivated by actions such as Executive Order 14028 and guided by NIST 800-207 and CISA’s Zero Trust Maturity model [1–3]. Although there are many frameworks for ZTA in industry, academia, and government, the essence of the philosophy can be summarized in a few brief maxims (Figure 1), such as the following:

- Never Trust, Always Verify

- Least Privilege Access

- Assume Breach

Figure 1. Key ZT Tenets (Source: D. Bhasker and C. Zarcone).

ZTA has spawned architectures and implementations that vary by organization, their respective technology stacks, security baselines, and appetites for risk [4]. As organizations implement this architecture, and as with any technology endeavor, there inevitably comes a point of diminishing returns. The following questions automatically arise from this: How much microsegmentation is enough? How continuous does our “continuous monitoring” need to be?

This article is a thought experiment in which the authors take a maximalist approach to the various ZTA tenets to observe any trends that naturally determine the bookends of the value generated by implementing ZTA.

Background

From its origins in a doctoral dissertation by Stephen Paul Marsh and the “de-perimeterisation” work of the Jericho Forum to Forrester Research and Google’s publication of its influential “BeyondCorp” series of white papers, ZTA has become mature by any architectural definition [5–8]. Many organizations are well on their way to ZTA, a process that typically spans multiple years and impacts almost every layer of the information technology stack. The process is often referred to as a “journey”—a recognition of the fact that for all but the smallest of organizations, migrating to ZTA will require sustained effort over a significant period.

Several ZTA and ZTA-compatible frameworks have proliferated over time. The differences between these frameworks often reflect the philosophies of their developers, the priorities of the organizations for which they are intended, and any procedural or technical constraints. Still, they tend to share a common set of characteristics, including the following:

- De-emphasis of networks as trust factors

  - The de-perimeterization of computer network boundaries

  - Enhanced emphasis on small, workload-specific perimeters (often called “microsegmentation”)

- Strong user and device authentication

- User and device policy compliance

- Continuous visibility and risk assessment of users and devices

All of this invites an interesting thought experiment: what happens if we extend the basic concepts of ZTA to their logical extremes? For example, how far do we go with de-perimeterization? Do we just eliminate perimeters altogether? How frequently do we authenticate our users? Frequently to the point of continuously? How much compliance is enough? Do we allow a margin of lenience or mandate zero deviation from policy?

The Disappearance of the Edge

The information security community has long used firewalls and other perimeter controls to segregate networks of different trust levels. But if one takes a maximalist view, ZTA suggests that all computer networks should be equally untrusted. From that perspective, it does not make sense to segregate networks of identical security posture; this is akin to building a fence in the middle of a cornfield—separating the corn from more corn.

If identity is the new perimeter, then why bother with the old? Do away with the edge altogether—fill in the castle moat, drop the drawbridge, and focus on fortifying the castle’s keep with elite guards.

This approach is ideologically consistent. But at the same time, it does not make much practical sense since trust and control are two different things. ZTA teaches that networks should not be trusted, but their controls can still be used to apply security policy, such as screening out undesirable traffic. An IP address might not be trusted for authentication purposes, but using a negative IP reputation score to inform real-time risk calculations is welcome.
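The distinction between trust and control can be made concrete with a minimal sketch. It assumes a hypothetical reputation score between 0.0 (known bad) and 1.0 (clean) and illustrative weights and thresholds; none of these values come from a specific ZTA framework. The network signal can only raise risk; it never substitutes for authentication.

```python
from dataclasses import dataclass


@dataclass
class AccessContext:
    user_authenticated: bool  # outcome of strong (e.g., multifactor) authentication
    ip_reputation: float      # hypothetical score: 0.0 = known bad, 1.0 = clean


def risk_score(ctx: AccessContext) -> float:
    """Return a risk value in [0, 1]. The IP signal adjusts risk but grants no trust."""
    if not ctx.user_authenticated:
        return 1.0  # a clean IP address never compensates for missing authentication
    base_risk = 0.2                               # illustrative residual risk
    penalty = 0.6 * (1.0 - ctx.ip_reputation)     # illustrative weight
    return min(1.0, base_risk + penalty)


def decide(ctx: AccessContext, step_up_at: float = 0.4, deny_at: float = 0.7) -> str:
    """Map the risk score to a policy response using assumed thresholds."""
    risk = risk_score(ctx)
    if risk >= deny_at:
        return "deny"
    if risk >= step_up_at:
        return "step_up"  # e.g., require reauthentication
    return "allow"


if __name__ == "__main__":
    for ctx in (AccessContext(True, 1.0), AccessContext(True, 0.5),
                AccessContext(True, 0.0), AccessContext(False, 1.0)):
        print(ctx, "->", decide(ctx))
```

In this sketch, an authenticated user on a poorly rated address is challenged or denied, while an unauthenticated request from a clean address is still denied.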

Despite the mantra that “the perimeter is dead,” edge controls still add a level of value, even in the ZTA world. At a minimum, they provide a level of pest control, keeping the ScRiPt KidDiEz of the world at bay. They also serve as strategic choke points for monitoring and response. But their effectiveness is inversely proportional to their size, hence the movement toward microperimeters and workload-specific segmentation. End-user enclaves like home networks are also served well by perimeters, given their relatively small sizes and fixed geographic locations.

Good Fences Make Good Neighbors

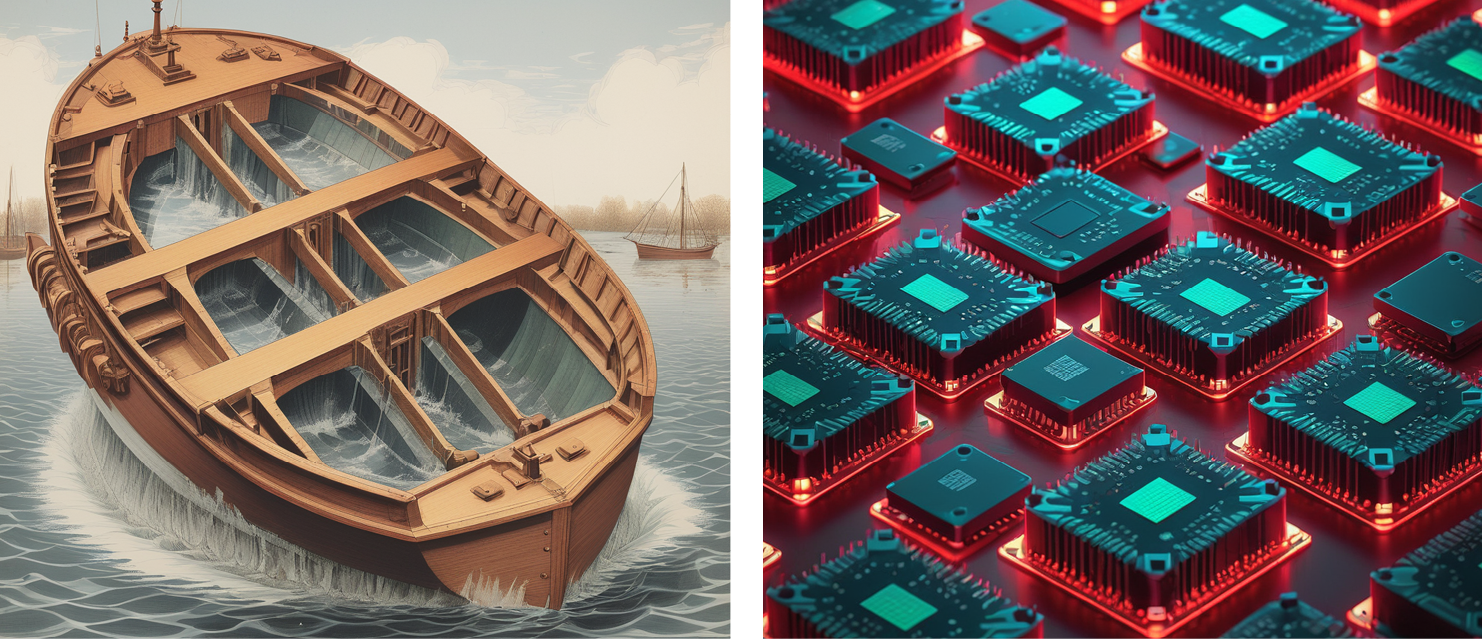

In the ZT paradigm, the new perimeter is not at the edge of a network; it is deep in the interior, at the level of individual workloads. Creating small, manageable perimeters designed to protect a distinct application or set of applications under common administration is the new network security ideal. This is often called microsegmentation. Like the watertight compartments of a seafaring ship’s hull, microsegmentation aims to isolate and limit the damage that occurs when any individual compartment is breached (Figure 2).

Figure 2. Ship Compartmentalization Compared With Microsegmentation Isolating Breach (Source: Weinstein et al. [9]).

How segmented should a microsegmented network perimeter be? There are many possibilities, such as the following:

- Aggregate – Network policy could be applied to the entire address space assigned to a workload; perhaps a /22 of IPv4 address space or a /56 of IPv6.

- Subnet – The aggregate could be further subdivided into several subnets as necessary, with a tailored network policy applied to each subnet.

- Server – Network policy could be defined down to the level of individually addressed servers, regardless of subnet or aggregate membership.

- Operating System – Mainly by using host-based firewalls, individual servers could apply network policy to the individual services bound and listening on that server.

- Process – Individual processes on the server (native or containerized) could apply their own network security policies.

Any of these levels could serve as adequate microperimeters. However, they could also be applied in series, yielding a layered approach. So, how many layers are enough, and how many are too many?
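As a rough illustration of applying these levels in series, the sketch below uses Python's standard `ipaddress` module to check a flow against three of the layers (aggregate, subnet, and server). The address ranges, tier roles, and port rules are hypothetical and chosen only for the example.

```python
import ipaddress

# Hypothetical /22 aggregate assigned to one workload, split into four /24 subnets.
AGGREGATE = ipaddress.ip_network("10.20.0.0/22")
SUBNETS = list(AGGREGATE.subnets(new_prefix=24))

# Hypothetical subnet-level policy: destination ports permitted per subnet.
SUBNET_ALLOWED_PORTS = {
    SUBNETS[0]: {443},   # assumed web tier
    SUBNETS[1]: {5432},  # assumed database tier
}

# Hypothetical server-level policy: source networks permitted per server.
SERVER_ALLOWED_SOURCES = {
    ipaddress.ip_address("10.20.1.10"): [SUBNETS[0]],  # database accepts web tier only
}


def allowed(src: str, dst: str, port: int) -> bool:
    """Evaluate the layers in series; every layer must permit the flow."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)

    # Layer 1 (aggregate): the destination must belong to the workload.
    if dst_ip not in AGGREGATE:
        return False

    # Layer 2 (subnet): the port must be permitted for the destination subnet.
    subnet = next((net for net in SUBNETS if dst_ip in net), None)
    if port not in SUBNET_ALLOWED_PORTS.get(subnet, set()):
        return False

    # Layer 3 (server): if the server has its own rule, the source must match it.
    sources = SERVER_ALLOWED_SOURCES.get(dst_ip)
    if sources is not None and not any(src_ip in net for net in sources):
        return False

    return True


if __name__ == "__main__":
    print(allowed("10.20.0.5", "10.20.1.10", 5432))  # web tier to database
    print(allowed("10.20.2.7", "10.20.1.10", 5432))  # other subnet to database
```

Each added layer narrows what is permitted but also adds another rule set to maintain, which is the trade-off behind the question of how many layers are too many.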

“But wait a minute,” someone may say, “I thought ZTA said that all networks are untrusted and that perimeters are passé, and here we are, building more perimeters. What gives?”

Once again, trust factors should not be confused with controls and control objectives. Even though the ship’s hull might have watertight compartments to enhance buoyancy (microsegmentation), the ship cannot do away with carrying lifeboats, fire extinguishers, or communicating with vessel traffic controllers for navigation updates. ZTA can work cohesively with existing controls.

Who Are You? Who Are You Again?

ZTA philosophy displaces networks as trust factors and, in turn, places stronger emphasis on user and device identity. Users and devices establish identity via authentication: typically multifactor authentication for interactive users and cryptographically strong mechanisms for devices.

ZTA calls for strong authentication to initiate a work session. Optionally, reauthentication can be required in situations where the risk profile of a user or device suddenly changes. But why use a reactive stance? Why not proactively reauthenticate a user or device every hour? Why not every 30 minutes, 10 minutes, single minute, or subsecond?

Clearly, users do not want to suffer authentication fatigue, so continuous interactive authentication is not an option. But the increasing use of second factors (user and/or device digital certificates installed on devices, hardware-based authenticators like FIDO2 tokens, and passkey-enabled smartphones) raises the possibility of perpetual authentication. A service endpoint, whether an application, a VPN concentrator, or an identity-aware access proxy, could interrogate such authenticators frequently, with no user intervention and negligible resource impact. Failed authentication (or the absence/removal of the authenticators) could have several explanations. It could be an innocuous change, such as loss of network connectivity or the user going to lunch. Or the change might not be innocuous; perhaps a FIDO2 token was physically removed. Who removed it? Why did they remove it? These occurrences could be used in calculations of contextual risk, which, in turn, could trigger policy-driven responses as needed.

It is true that perpetually challenging a digital certificate or interrogating a hardware token only confirms that those authenticators are still present—the status of the user remains unknown. But this is better than nothing. If nothing else, perpetual authentication can help build a more accurate risk profile for that device; the instant an authentication fails, something has changed.
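A minimal sketch of how such polling results might feed a device risk profile follows. The class name, the point values, and the response names are hypothetical; a real deployment would obtain the presence signal from its own authenticator or certificate challenge rather than from a Boolean passed in by the caller.

```python
from dataclasses import dataclass, field


@dataclass
class DeviceRiskProfile:
    """Hypothetical per-device profile updated on every silent authenticator poll."""
    risk: int = 0                 # illustrative risk points, clamped to 0..100
    consecutive_failures: int = 0
    history: list = field(default_factory=list)

    def record_poll(self, authenticator_present: bool) -> str:
        """Update the profile from one poll and return an assumed policy response."""
        if authenticator_present:
            self.consecutive_failures = 0
            self.risk = max(0, self.risk - 10)  # risk decays while polls succeed
            action = "continue"
        else:
            self.consecutive_failures += 1
            self.risk = min(100, self.risk + 30)
            if self.consecutive_failures >= 3:
                action = "suspend_session"
            elif self.consecutive_failures == 2:
                action = "reauthenticate"
            else:
                action = "observe"  # one miss may be innocuous (e.g., network loss)
        self.history.append((authenticator_present, action))
        return action


if __name__ == "__main__":
    profile = DeviceRiskProfile()
    for present in (True, False, False, True, False, False, False):
        print(present, profile.record_poll(present), profile.risk)
```

A single missed poll only raises the risk score, which reflects the point that a failed check signals a change without explaining it; repeated misses escalate the response.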

Speed Dialing 911

The “Assume Breach” tenet of ZTA presumes that the adversary is already in the environment. This places an organization in a state of proactive response—a state of constant vigilance across its people, processes, and technologies. Containment, eradication, and recovery become business-as-usual processes, even if there is no detectable incident at any given time.

Assume Breach applies regardless of how effective preventative controls such as “Secure by Design,” threat modeling, code reviews, and other security hygiene best practices may be. These controls might include other ZTA tenets like policy compliance, microsegmentation, and continuous verification.

From a maximalist vantage point, an adversary in an organization’s environment can and will use all attack methods, tactics, techniques, and procedures at their disposal, with potentially all exploitation objectives (from reconnaissance, disruption of service, and data exfiltration to malware, ransomware, and Zero-Day exploits) to maximize damage. This, in turn, would imply that defensive security is constantly operating in a state of detecting, containing, and recovering from all possible breaches simultaneously. Exercising all security remediation, recovery technologies, and procedures at once would amount to a self-directed assault on the environment. As the saying goes, the dose determines whether a medicine is a cure or a poison.

Like the villagers who grew weary of rushing to the aid of the boy who cried wolf, Security Operations Center (SOC) analysts tire of false alarms; SOC fatigue is real. On average, SOCs reportedly deal with a 20% rate of false positives, with experts spending a third of their time on incidents that do not pose threats to the organization [10, 11]. SOC fatigue results in experts missing the subtle indicators of threat actors’ presence in the environment.

Behavior that deviates from the baseline is not necessarily malicious. A remote login at an unusual hour could simply be a conscientious employee checking in on work during a family vacation. How much security expertise does an organization want to expend on such security events?

The Assume Breach tenet is a mindset that shifts the organization from focusing only on prevention of breaches or remediation after an incident has been detected (adversaries are often detected months after infiltration) toward continuous detection and recovery. However, establishing intelligent, rapid detection, response, containment, and recovery protocols must be measured. Breach response must be tactical, effective, and aligned with the protect surface and risk appetite of the organization. To optimize effectiveness, the following approaches are recommended:

- Prioritize “Protect Surfaces” (which are the smallest possible attack surfaces) for:

  - inspecting and logging all traffic before acting,

  - continuously monitoring all configuration changes, resource accesses, and network traffic for suspicious activity, and

  - establishing full visibility of all activity across all layers, from endpoints to the network, to enable analytics that can detect suspicious activity.

- Establish security baselines and gather ample contextual data to distinguish the anomalies that indicate threat actors from benign deviations from the baseline.

- Practice “Table Top” war games and red-teaming exercises.

- Ensure robust detection and response playbooks with proper implementation.
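The baseline recommendation above can be illustrated with a small sketch that combines a statistical deviation with contextual data before any analyst time is spent. The z-score threshold, the context fields, and the triage labels are assumptions made for the example, and login hours are treated as plain numbers for simplicity (a real baseline would account for the wrap-around at midnight).

```python
from statistics import mean, pstdev


def deviation(value: float, baseline: list) -> float:
    """Return how many standard deviations `value` lies from the baseline mean."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma


def triage(login_hour: int, baseline_hours: list, known_device: bool,
           mfa_passed: bool, z_threshold: float = 3.0) -> str:
    """Classify a login event using both the baseline and its context."""
    if deviation(login_hour, baseline_hours) < z_threshold:
        return "ignore"    # within normal behavior for this user
    if known_device and mfa_passed:
        return "log_only"  # unusual but well-supported by context
    return "escalate"      # unusual and unsupported: worth analyst attention


if __name__ == "__main__":
    usual_hours = [9, 9, 10, 8, 9, 10, 8, 9]  # hypothetical login history
    print(triage(9, usual_hours, known_device=True, mfa_passed=True))
    print(triage(2, usual_hours, known_device=True, mfa_passed=True))
    print(triage(2, usual_hours, known_device=False, mfa_passed=True))
```

Under these assumptions, the employee logging in at an unusual hour from a known device with a passed second factor is recorded but not escalated, which is one way to conserve SOC attention for events that lack supporting context.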

How Much (Zero) Trust in a Zettabyte?

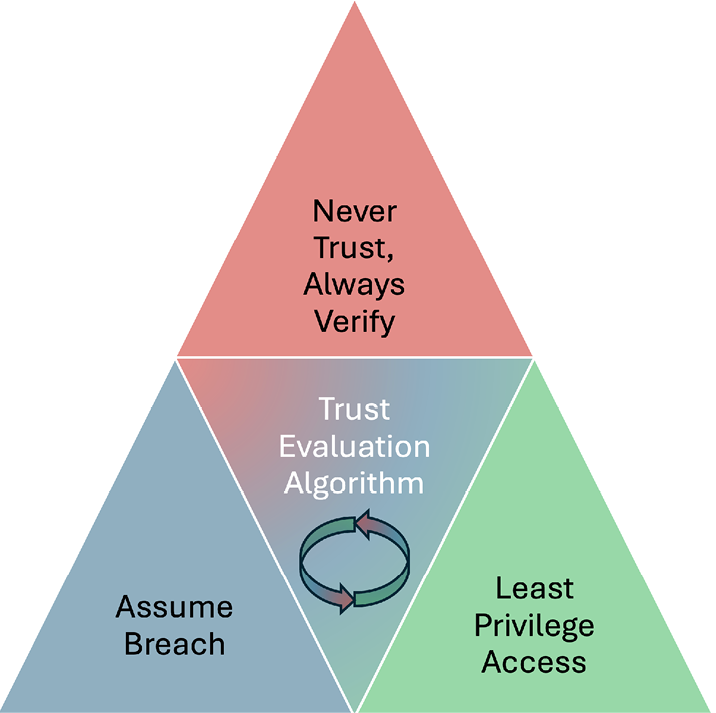

While trust evaluation algorithms (Figure 3) are rarely explicitly called out as a core tenet of ZTA principles, they are central to each of them, and they feed the processes of overall implementation (policy engines), automation, and orchestration.

Figure 3. Trust Evaluation Algorithm (Source: ACT-IAC [12]).

However, this might also be the one Zero Trust parameter where more trust is better and maximum is best. Besides, how could one go wrong with maximum trust? The richer the telemetry, the faster the analytics, and the more fine-tuned the algorithm, the more accurate the risk calculations and context evaluations become, yielding the reliable trust assertions that underpin ZTA policy decisions.

As the number of computers, servers, devices, and sensors continues to proliferate, they are generating large amounts of telemetry data (metrics, events, logs, and more). According to IDC’s Global DataSphere forecasts, data generated from the core, edge, and endpoints are estimated to reach over 220,000 exabytes by 2026 [13, 14]. A myriad of issues need to be dealt with in terms of data volumes, storage, normalization, cross-correlation, streaming data, analytics, enrichment, privacy protections, and more. The richer the data, the greater the accuracy of the context and risk calculations for an entity. On the other hand, the greater the data volumes and associated processing, the more complex it becomes to evaluate context. This complexity introduces latency, lag, and errors into trust computations and, in turn, impedes ZTA implementation.

These inefficiencies will drive trust algorithms toward a natural balance between the costs of computation, data storage, ingest, complexity, processing, and normalization and the value of each data point used to assert trust. How much trust is enough will be determined by the risk appetite that aligns with an organization’s goals.
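One way to picture this balance is a sketch in which a trust algorithm selects telemetry signals under a processing budget. The signal names, their value and cost figures, and the greedy value-per-cost heuristic are all hypothetical; they illustrate diminishing returns rather than describe any particular policy engine.

```python
from typing import NamedTuple


class Signal(NamedTuple):
    name: str
    value: int    # hypothetical contribution to decision accuracy (arbitrary units)
    cost_ms: int  # hypothetical cost to collect and process, in milliseconds


def select_signals(signals: list, budget_ms: int) -> list:
    """Greedily pick signals with the best value per unit cost within the budget."""
    chosen, spent = [], 0
    ranked = sorted(signals, key=lambda s: s.value / s.cost_ms, reverse=True)
    for signal in ranked:
        if spent + signal.cost_ms <= budget_ms:
            chosen.append(signal)
            spent += signal.cost_ms
    return chosen


CANDIDATES = [
    Signal("device_certificate", value=40, cost_ms=5),
    Signal("ip_reputation", value=20, cost_ms=10),
    Signal("behavior_analytics", value=30, cost_ms=60),
    Signal("full_packet_history", value=10, cost_ms=200),
]

if __name__ == "__main__":
    for budget in (20, 80, 300):
        picked = select_signals(CANDIDATES, budget)
        print(budget, [s.name for s in picked], sum(s.value for s in picked))
```

With these illustrative numbers, a budget of 80 ms captures 90 of the 100 available value units, while the final 10 units require more than tripling the budget. Where to stop is a risk-appetite decision, not a purely technical one.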

Maturity Analysis

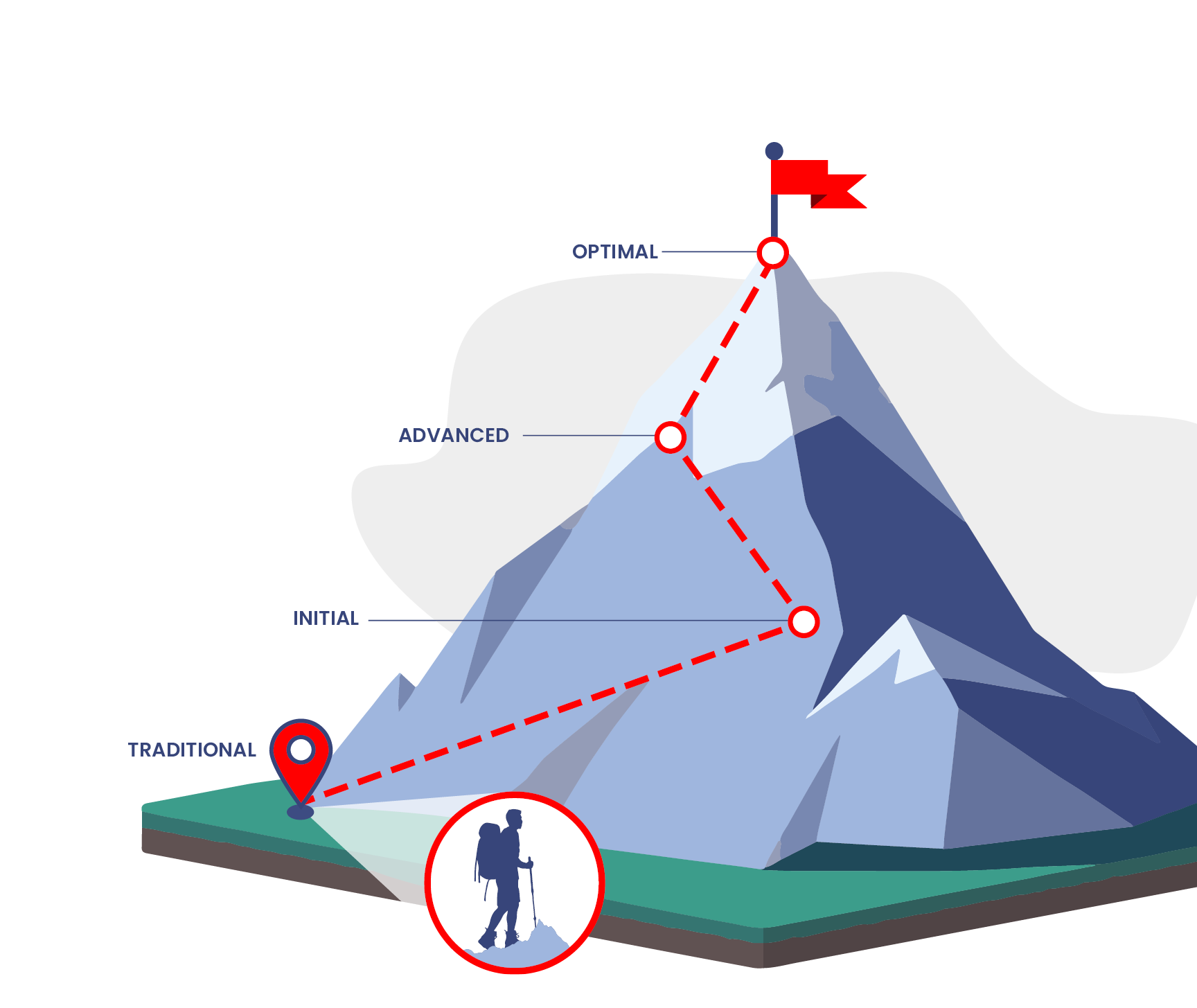

Zero Trust is a journey, not a destination, and the journey is a complex one. If the Zero Trust approach falters, its cybersecurity benefits will significantly degrade [15]. Absolute Zero Trust does not exist and, in a sense, is not achievable for all the reasons discussed. Much of the “Extreme Zero Trust” discussion in this article illustrates this. The law of diminishing returns is always in force, and Zero Trust is no exception. This is perhaps the main reason why frameworks like CISA’s Zero Trust Maturity model culminate with the “optimal” state and not “absurd,” “excessive,” or “extreme.”

ZTA may dismiss networks as trust factors, but this does not mean that network controls are entirely without value. In fact, many network controls are rolled into basic security hygiene to establish robust security baselines. Robust network tenancy and microsegmentation for applications and workloads are still desired; Protect Surfaces unnecessarily exposed to the internet are not. Factors like IP reputation scores are needed as part of intelligent risk analytics. Essentially, there is a shift in the way network controls are used in ZTA; however, they are still intrinsically valuable and remain a core part of an Optimal Network pillar.

Authentication is a security virtue. When applied too frequently, it becomes a hindrance to productivity and user acceptance, inevitably leading to workarounds. Depending on the architecture and use cases, it might be advisable to use hardware-based authenticators that support seamless polling and thereby maintain continuous context awareness. A change of context or risk profile could trigger any number of policy responses, such as reauthentication. Essentially, factors optimizing productivity and user experience counterbalance the maximalist options for this tenet. Optimal identity is continuous and not confounding.

Assume Breach is a sensible position but should not succumb to paranoia and paralysis through analysis. If every log message and every signal is interpreted as a breach, the organization has bigger problems. False positives, security expertise expended on signals that do not threaten the organization, and SOC fatigue lead to lower quality of responses and depleted resources during true threats and malicious events. Optimization—knowing and prioritizing Protect Surfaces and efficiently analyzing signals and preparedness—is a must for Assume Breach tenet effectiveness. In the CISA model, “Visibility & Analytics” is depicted as a foundational overlay across the various pillars but is arguably its own pillar.

Lastly, the most precise trust algorithms require more data, decision points, and analysis—all potentially raising complexity in data analytics toward the point of diminishing returns. With data exponentially increasing in environments, keeping trust algorithms as streamlined as possible to arrive at an effective, valid trust decision is perhaps the best outcome for ZTA-centric policy decisions. Interestingly, data sits alone as both a pillar of protection and the asset protected by that pillar, creating a circular maturity dependency.

Through the course of the Zero Trust journey, proper risk management and alignment with technology strategy are essential. For example, organizations may choose to align with CISA’s Zero Trust Maturity model. The risk profiles of those organizations will determine the ZTA measures implemented, and the technology strategy will drive the technical controls across the ZTA pillars (Identity, Devices, Networks, Applications and Workloads, and Data). As organizations journey toward the Optimal stage, they will find that they are at different levels of maturity across each pillar (Figure 4). Typically, it is challenging to advance across all pillars simultaneously. As a result, some pillars will be prioritized over others.

Figure 4. CISA’s ZT Maturity Journey (Source: CISA Cybersecurity Division [2]).

Moreover, since the ZTA tenets have interdependencies, developing one pillar requires constant vigilance for cross-impacts on other pillars (or even on other controls within the same pillar). For example, in the Data pillar, if encryption of data in transit (Initial), data at rest (Advanced), and data in use (Optimal) are all achieved, a data loss prevention control in the same pillar might lose visibility into the data and become a ghost control. Alternatively, reaching the Optimal level of encryption in the Data pillar might support Advanced maturity of the Network pillar by creating encrypted network flows.

Ultimately, ZTA maturity—in the CISA context or otherwise—involves a level of restraint. Optimal does not mean everything.

Conclusions

In review, when it comes to ZTA, more is not necessarily better. Rather, each organization should evaluate the ZTA tenets to find the right balance. Its unique goals, specific technology stack, Protect Surfaces, processes, assets, and culture, along with their many specifics, will determine the best-case scenario for that organization.

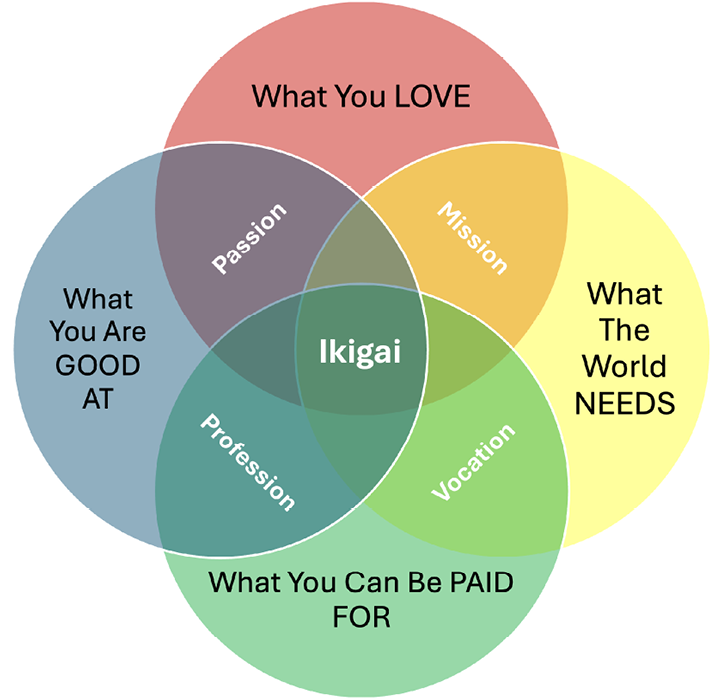

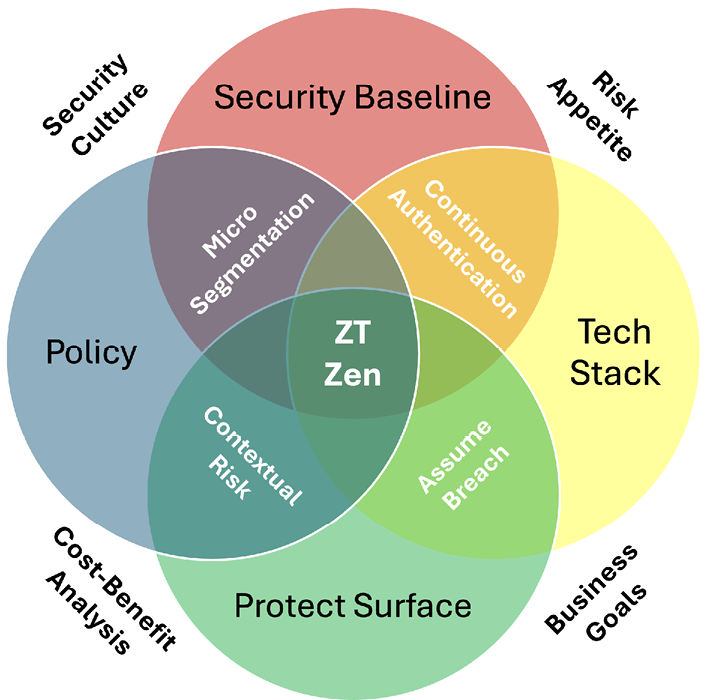

The Japanese concept of ikigai, the “reason for being”—that which gives an individual a sense of purpose—is found at the intersection of a confluence of factors (Figure 5). Similarly, the right measure of Zero Trust (which is not maximalist) is found at the intersection of a multitude of factors. Many of these factors are discussed deeply in publications such as NIST 800-207 and CISA’s Zero Trust Maturity model. The Zero Trust Zen diagram (Figure 6) is rudimentary and for illustrative purposes only. It is not a comprehensive list of all possible factors that determine ZTA. ZTA is much more complex than the simplicity of life depicted in the ikigai diagram (Figure 5). As such, maximalist or extreme ZTA is not viable; in certain ways, it can be detrimental to the intent of Zero Trust principles. More is not necessarily better for the Zero Trust tenets explored. With that, one must ponder, what is your ikigai/what is your ZTA?

Figure 5. Ikigai: “Reason for Being” (Source: Kaplan [16]).

Figure 6. ZT Zen (Source: Kaplan [16]).

Note

Opinions expressed in this article are the authors’ and not necessarily those of their employers.

References

- National Institute of Standards and Technology (NIST) SP 800-207. “Zero Trust Architecture.” https://csrc.nist.gov/pubs/sp/800/207/final, accessed 15 November 2023.

- Cybersecurity and Infrastructure Security Agency (CISA) Cybersecurity Division. “Zero Trust Maturity Model 2.0.” https://www.cisa.gov/sites/default/files/2023-04/zero_trust_maturity_model_v2_508.pdf, accessed November 2023.

- Executive Order 14028. “Improving the Nation’s Cybersecurity.” 86 FR 26633, https://www.whitehouse.gov/briefing-room/presidential-actions/2021/05/12/executive-order-on-improving-the-nations-cybersecurity/, accessed 10 December 2023.

- National Cyber Security Centre. “Zero Trust Architecture Design Principles.” https://www.ncsc.gov.uk/collection/zero-trust-architecture, accessed November 2023.

- The Open Group. “Secure Data: Enterprise Information Protection and Control.” https://www.opengroup.org/sites/default/files/contentimages/Consortia/Jericho/documents/COA_EIPC_v1.1.pdf, accessed 23 November 2023.

- Ward, R., and B. Beyer. “BeyondCorp: A New Approach to Enterprise Security.” ;login:, vol. 39, no. 6, pp. 6–11, https://storage.googleapis.com/gweb-research2023-media/pubtools/pdf/43231.pdf, accessed 23 November 2023.

- Kindervag, J. “Build Security Into Your Network’s DNA: The Zero Trust Network Architecture.” Forrester Research, https://www.forrester.com/report/Build-Security-Into-Your-Networks-DNA-The-Zero-Trust-Network-Architecture/RES57047, accessed 23 November 2023.

- Marsh, S. P. “Formalising Trust as a Computational Concept.” Ph.D. dissertation, University of Stirling, Stirling, Scotland, https://www.cs.stir.ac.uk/~kjt/techreps/pdf/TR133.pdf, accessed 23 November 2023.

- Weinstein, S., C. Zarcone, and S. Zevan. “Zero Trust Security Architecture for The Enterprise.” SCTE Cable-Tec Expo 2022, https://www.nctatechnicalpapers.com/Paper/2022/FTF22_SEC04_Zarcone_3696, accessed 10 February 2024.

- Nadeau, J. “SOCs Spend 32% of the Day on Incidents That Pose No Threat.” Security Intelligence, https://securityintelligence.com/articles/socs-spend-32-percent-day-incidents-pose-no-threat/, accessed 2 February 2024.

- “One-Fifth of Cybersecurity Alerts Are False Positives.” Security Magazine, https://www.securitymagazine.com/articles/97260-one-fifth-of-cybersecurity-alerts-are-false-positives, accessed 5 February 2024.

- ACT-IAC. “Zero Trust Cybersecurity Current Trends.” https://www.actiac.org/system/files/ACT-IAC%20Zero%20Trust%20Project%20Report%2004182019.pdf, accessed 2 February 2024.

- Burgener, E., and J. Rydning. “High Data Growth and Modern Applications Drive New Storage Requirements in Digitally Transformed Enterprises.” IDC, https://www.delltechnologies.com/asset/en-my/products/storage/industry-market/h19267-wp-idc-storage-reqs-digital-enterprise.pdf, accessed February 2024.

- Reinsel, D., J. Gantz, and J. Rydning. “The Digitization of the World From Edge to Core.” IDC, https://www.seagate.com/files/www-content/our-story/trends/files/idc-seagate-dataage-whitepaper.pdf, accessed 4 February 2024.

- National Security Agency. “Embracing a Zero Trust Security Model.” https://media.defense.gov/2021/Feb/25/2002588479/-1/-1/0/CSI_EMBRACING_ZT_SECURITY_MODEL_UOO115131-21.PDF, accessed 19 January 2024.

- Kaplan, O. “Goals vs. Systems and Finding Your Ikigai.” https://slashproject.co/posts/2020/goals-vs-systems-and-finding-your-ikigai/, accessed 20 November 2023.

Biographies

Daksha Bhasker is a principal cybersecurity architect at Microsoft, where she works on M365 cloud security. Throughout her career, she has engaged with the broader security industry to better the state of security architectures of current and emerging technologies. She has over 20 years of experience in the telecommunications service provider industry, specializing in systems security development of complex solutions architectures. Ms. Bhasker holds an M.S. in computer systems engineering from Irkutsk State Technical University and an MBA in ecommerce from the University of New Brunswick, Canada.

Christopher Zarcone is a distinguished engineer at Comcast and a computer scientist specializing in Zero Trust. He has presented at information security conferences and seminars, contributed to the development of industry standards, and has been awarded 10 U.S. patents. He was recently named Engineer of the Year by IEEE’s Philadelphia Section. Mr. Zarcone holds a bachelor’s degree in computer science from Drexel University and a master’s degree in computer science from Rensselaer Polytechnic Institute.